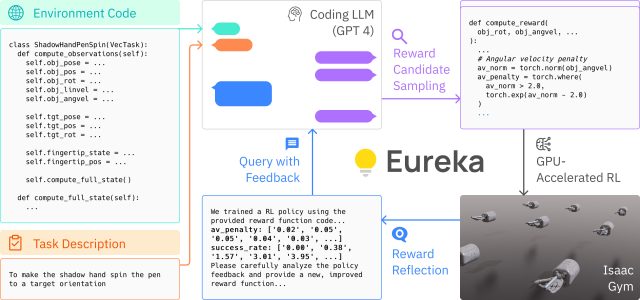

On Friday, researchers from Nvidia, UPenn, Caltech, and the University of Texas at Austin introduced Eureka, an algorithm that uses OpenAI’s GPT-4 language model to design training goals (called “reward functions”) that enhance robot dexterity. The work aims to bridge the gap between high-level reasoning and low-level motor control, allowing robots to learn complex tasks rapidly using massively parallel simulations that run through trials simultaneously. According to the team, Eureka outperforms human-written reward functions by a substantial margin.

Before robots can interact with the real world successfully, they need to learn how to move their robot bodies to achieve goals, such as picking up objects or moving around. Instead of making a physical robot try and fail one task at a time in a lab, researchers at Nvidia have been experimenting with video game-like computer worlds (thanks to platforms called Isaac Sim and Isaac Gym) that simulate three-dimensional physics. These allow massively parallel training sessions to take place in many virtual worlds at once, dramatically speeding up training time.

“Leveraging state-of-the-art GPU-accelerated simulation in Nvidia Isaac Gym,” writes Nvidia on its demonstration page, “Eureka is able to quickly evaluate the quality of a large batch of reward candidates, enabling scalable search in the reward function space.” They call it “rapid reward evaluation via massively parallel reinforcement learning.”

The researchers describe Eureka as a “hybrid-gradient architecture,” which essentially means that it is a blend of two different learning models. A low-level neural network dedicated to robot motor control takes instructions from a high-level, inference-only large language model (LLM) like GPT-4. The architecture employs two loops: an outer loop that uses GPT-4 to refine the reward function, and an inner loop that uses reinforcement learning to train the robot’s control system.
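To make the two-loop idea concrete, here is a minimal Python sketch. It is not the Eureka code and only mirrors the structure described above. The "robot" is a toy one-dimensional system, the GPT-4 call is replaced by a hypothetical `propose_rewards` stub that randomly perturbs reward coefficients, and the reinforcement learning step is replaced by simple hill climbing in `train_policy`. Every name and constant is an assumption made for illustration.

```python
import random
from typing import Callable, Tuple

TARGET = 3.0  # toy goal: the final state should land here
Params = Tuple[float, float]  # (w_dist, w_bonus): hypothetical reward coefficients


def rollout(step: float, n_steps: int = 10) -> float:
    """Toy 'robot': start at 0 and move by `step` each tick; return the final state."""
    return step * n_steps


def task_fitness(step: float) -> float:
    """Ground-truth task score, used only to compare candidates (higher is better)."""
    return -abs(rollout(step) - TARGET)


def make_reward(params: Params) -> Callable[[float], float]:
    """Build a candidate reward. A nonzero w_bonus mis-shapes it (rewards overshooting)."""
    w_dist, w_bonus = params
    return lambda state: -w_dist * (state - TARGET) ** 2 + w_bonus * state


def propose_rewards(best: Params, rng: random.Random, k: int = 4) -> list:
    """Stand-in for the LLM call (assumption): perturb the best coefficients so far."""
    out = []
    for _ in range(k):
        w_dist = max(0.1, best[0] + rng.uniform(-0.5, 0.5))
        w_bonus = max(0.0, best[1] + rng.uniform(-1.0, 1.0))
        out.append((w_dist, w_bonus))
    return out


def train_policy(reward: Callable[[float], float], rng: random.Random,
                 iters: int = 200) -> float:
    """Inner loop: hill climbing on the policy parameter, a stand-in for RL."""
    step = 0.0
    best_r = reward(rollout(step))
    for _ in range(iters):
        cand = step + rng.gauss(0.0, 0.05)
        r = reward(rollout(cand))
        if r > best_r:  # keep the candidate only if the reward improves
            step, best_r = cand, r
    return step


def eureka_like_search(rounds: int = 3, seed: int = 0):
    """Outer loop: propose rewards, train a policy on each, keep the best by task score."""
    rng = random.Random(seed)
    best: Params = (1.0, 2.0)  # deliberately mis-shaped starting reward
    baseline_fit = task_fitness(train_policy(make_reward(best), rng))
    best_fit = baseline_fit
    for _ in range(rounds):
        for params in propose_rewards(best, rng):
            fit = task_fitness(train_policy(make_reward(params), rng))
            if fit > best_fit:
                best, best_fit = params, fit
    return best, best_fit, baseline_fit


if __name__ == "__main__":
    params, fit, baseline = eureka_like_search()
    print("best reward coefficients:", params)
    print("task fitness:", fit, "baseline:", baseline)
```

In this toy, a candidate reward counts as better only if the policy trained on it scores higher on the separate ground-truth task measure. That is the role the outer loop plays in the description above. In the real system, each inner-loop run is a full reinforcement learning job in simulation, not a few hundred hill-climbing steps.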

The research is detailed in a new preprint research paper titled “Eureka: Human-Level Reward Design via Coding Large Language Models.” Authors Jason Ma, William Liang, Guanzhi Wang, De-An Huang, Osbert Bastani, Dinesh Jayaraman, Yuke Zhu, Linxi “Jim” Fan, and Anima Anandkumar used the aforementioned Isaac Gym, a GPU-accelerated physics simulator, to reportedly speed up the physical training process by a factor of 1,000. In the paper’s abstract, the authors claim that Eureka outperformed expert human-engineered rewards in 83 percent of a benchmark suite of 29 tasks across 10 different robots, improving performance by an average of 52 percent.

Additionally, Eureka introduces a novel form of reinforcement learning from human feedback (RLHF), allowing a human operator’s natural language feedback to influence the reward function. This could serve as a “powerful co-pilot” for engineers designing sophisticated motor behaviors for robots, according to an X post by Nvidia AI researcher Fan, who is a listed author on the Eureka research paper. One surprising achievement, Fan says, is that Eureka enabled robots to perform pen-spinning tricks, a skill that is difficult even for CGI artists to animate.

So what does it all mean? In the future, teaching robots new tricks will likely come at accelerated speed thanks to massively parallel simulations, with a little help from AI models that can oversee the training process. The latest work is adjacent to previous experiments from Microsoft and Google that used language models to control robots.

On X, Shital Shah, a principal research engineer at Microsoft Research, wrote that the Eureka approach appears to be a key step toward realizing the full potential of reinforcement learning: “The proverbial positive feedback loop of self-improvement could be just around the corner that allows us to go beyond human training data and capabilities.”

The Eureka team has made its research and code base publicly available for further experimentation and for future researchers to build on. The paper can be accessed on arXiv, and the code is available on GitHub.
