Secure Diffusion

On Tuesday, OpenAI published a brand new analysis paper detailing a method that makes use of its GPT-4 language mannequin to jot down explanations for the habits of neurons in its older GPT-2 mannequin, albeit imperfectly. It is a step ahead for “interpretability,” which is a discipline of AI that seeks to elucidate why neural networks create the outputs they do.

Whereas massive language fashions (LLMs) are conquering the tech world, AI researchers nonetheless do not know lots about their performance and capabilities underneath the hood. Within the first sentence of OpenAI’s paper, the authors write, “Language fashions have turn into extra succesful and extra extensively deployed, however we don’t perceive how they work.”

For outsiders, that probably feels like a surprising admission from an organization that not solely is determined by income from LLMs but in addition hopes to accelerate them to beyond-human ranges of reasoning means.

However this property of “not realizing” precisely how a neural community’s particular person neurons work collectively to provide its outputs has a well known title: the black box. You feed the community inputs (like a query), and also you get outputs (like a solution), however no matter occurs in between (contained in the “black field”) is a thriller.

In an try to peek contained in the black field, researchers at OpenAI utilized its GPT-4 language mannequin to generate and consider pure language explanations for the habits of neurons in a vastly much less complicated language mannequin, equivalent to GPT-2. Ideally, having an interpretable AI mannequin would assist contribute to the broader aim of what some folks name “AI alignment,” making certain that AI techniques behave as supposed and mirror human values. And by automating the interpretation course of, OpenAI seeks to beat the constraints of conventional handbook human inspection, which isn’t scalable for bigger neural networks with billions of parameters.

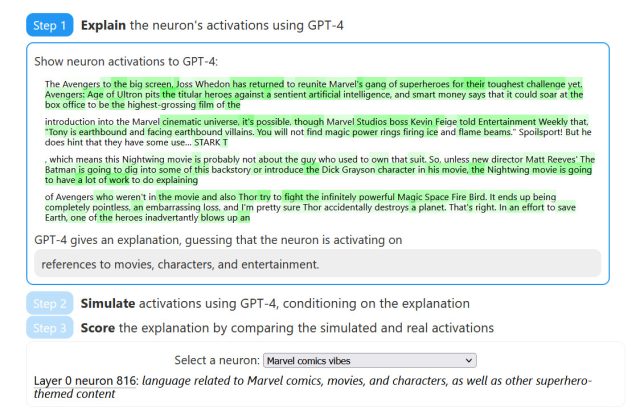

OpenAI’s approach “seeks to elucidate what patterns in textual content trigger a neuron to activate.” Its methodology consists of three steps:

- Clarify the neuron’s activations utilizing GPT-4

- Simulate neuron activation habits utilizing GPT-4

- Examine the simulated activations with actual activations.

To know how OpenAI’s methodology works, you could know a number of phrases: neuron, circuit, and a focus head. In a neural community, a neuron is sort of a tiny decision-making unit that takes in info, processes it, and produces an output, similar to a tiny mind cell making a choice based mostly on the alerts it receives. A circuit in a neural community is sort of a community of interconnected neurons that work collectively, passing info and making selections collectively, much like a gaggle of individuals collaborating and speaking to unravel an issue. And an consideration head is sort of a highlight that helps a language mannequin pay nearer consideration to particular phrases or components of a sentence, permitting it to higher perceive and seize essential info whereas processing textual content.

By figuring out particular neurons and a focus heads throughout the mannequin that should be interpreted, GPT-4 creates human-readable explanations for the perform or function of those elements. It additionally generates a proof rating, which OpenAI calls “a measure of a language mannequin’s means to compress and reconstruct neuron activations utilizing pure language.” The researchers hope that the quantifiable nature of the scoring system will permit measurable progress towards making neural community computations comprehensible to people.

So how properly does it work? Proper now, not that nice. Throughout testing, OpenAI pitted its approach in opposition to a human contractor that carried out related evaluations manually, they usually discovered that each GPT-4 and the human contractor “scored poorly in absolute phrases,” that means that decoding neurons is troublesome.

One clarification put forth by OpenAI for this failure is that neurons could also be “polysemantic,” which implies that the everyday neuron within the context of the examine might exhibit a number of meanings or be related to a number of ideas. In a piece on limitations, OpenAI researchers talk about each polysemantic neurons and likewise “alien options” as limitations of their methodology:

Moreover, language fashions might signify alien ideas that people do not have phrases for. This might occur as a result of language fashions care about various things, e.g. statistical constructs helpful for next-token prediction duties, or as a result of the mannequin has found pure abstractions that people have but to find, e.g. some household of analogous ideas in disparate domains.

Different limitations embrace being compute-intensive and solely offering quick pure language explanations. However OpenAI researchers are nonetheless optimistic that they’ve created a framework for each machine-meditated interpretability and the quantifiable technique of measuring enhancements in interpretability as they enhance their methods sooner or later. As AI fashions turn into extra superior, OpenAI researchers hope that the standard of the generated explanations will enhance, providing higher insights into the interior workings of those complicated techniques.

OpenAI has revealed its analysis paper on an interactive website that accommodates instance breakdowns of every step, exhibiting highlighted parts of the textual content and the way they correspond to certain neurons. Moreover. OpenAI has supplied “Automated interpretability” code and its GPT-2 XL neurons and explanations datasets on GitHub.

In the event that they ever determine precisely why ChatGPT makes things up, the entire effort might be properly value it.