On Wednesday, Google launched PaLM 2, a family of foundational language models comparable to OpenAI’s GPT-4. At its Google I/O event in Mountain View, Google revealed that it’s already using PaLM 2 to power 25 different products, including its Bard conversational AI assistant.

As a family of large language models (LLMs), PaLM 2 has been trained on an enormous volume of data and does next-word prediction, which outputs the most likely text after a prompt input by humans. PaLM stands for “Pathways Language Model,” and in turn, “Pathways” is a machine-learning technique created at Google. PaLM 2 follows up on the original PaLM, which Google announced in April 2022.
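To make "next-word prediction" concrete, here is a toy sketch in Python. It is not how PaLM 2 works internally: real LLMs use neural networks with billions of learned parameters, while this example simply counts which word most often follows another in a tiny, made-up corpus. The function names and the sample text are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" once
```

An LLM does something similar in spirit, picking a likely continuation given the text so far, but it conditions on the whole prompt rather than a single preceding word.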

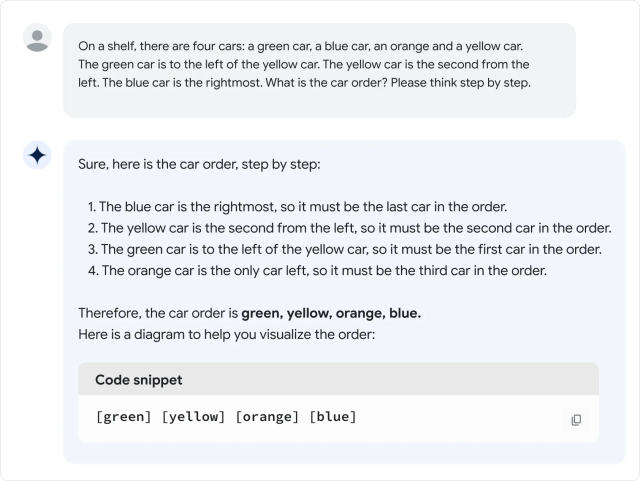

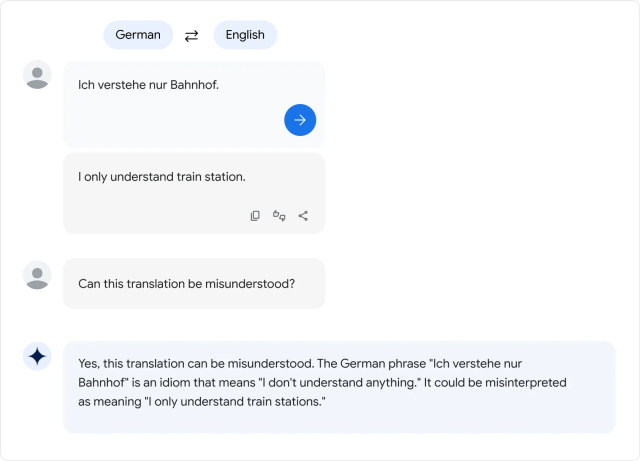

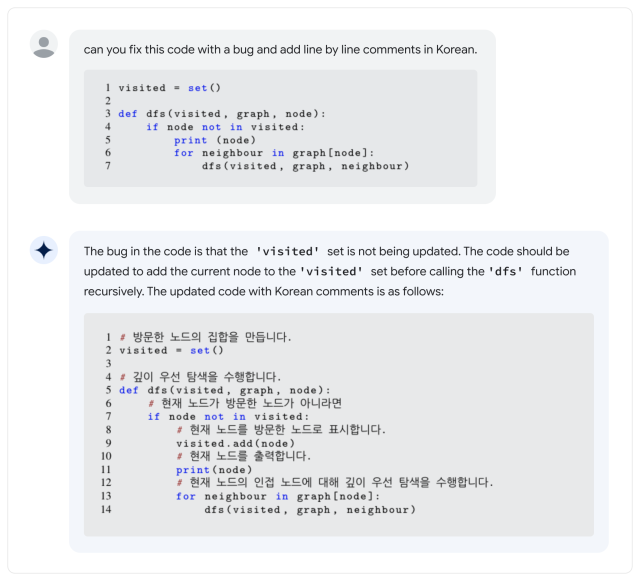

According to Google, PaLM 2 supports over 100 languages and can perform “reasoning,” code generation, and multi-lingual translation. During his 2023 Google I/O keynote, Google CEO Sundar Pichai said that PaLM 2 comes in four sizes: Gecko, Otter, Bison, and Unicorn. Gecko is the smallest and can reportedly run on a mobile device. Aside from Bard, PaLM 2 is behind AI features in Docs, Sheets, and Slides.

All that is fine and well, but how does PaLM 2 stack up to GPT-4? In the PaLM 2 Technical Report, PaLM 2 appears to beat GPT-4 in some mathematical, translation, and reasoning tasks. But reality might not match Google’s benchmarks. In a cursory evaluation of the PaLM 2 version of Bard, Ethan Mollick, a Wharton professor who often writes about AI, found that PaLM 2’s performance appears worse than GPT-4 and Bing on a variety of informal language tests, which he detailed in a Twitter thread.

Until recently, the PaLM family of language models has been an internal Google Research product with no consumer exposure, but Google began offering limited API access in March. Still, the first PaLM was notable for its massive size: 540 billion parameters. Parameters are numerical variables that serve as the learned “knowledge” of the model, enabling it to make predictions and generate text based on the input it receives.

More parameters roughly means more complexity, but there’s no guarantee they’re used efficiently. By comparison, OpenAI’s GPT-3 (from 2020) has 175 billion parameters. OpenAI has never disclosed the number of parameters in GPT-4.
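For a rough sense of scale, the two disclosed parameter counts can be compared with some back-of-the-envelope arithmetic. The sketch below assumes each parameter is stored as a 16-bit (2-byte) number; that storage format is an assumption made for illustration, not something Google or OpenAI is quoted as saying here, and the function name is hypothetical.

```python
# Parameter counts as stated in the article.
PALM_PARAMS = 540_000_000_000   # original PaLM
GPT3_PARAMS = 175_000_000_000   # GPT-3 (2020)

# Assumption: 2 bytes per parameter (16-bit storage).
BYTES_PER_PARAM = 2

def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Approximate storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9

ratio = PALM_PARAMS / GPT3_PARAMS
print(f"PaLM has about {ratio:.2f}x as many parameters as GPT-3")
print(f"PaLM weights:  ~{weight_memory_gb(PALM_PARAMS):.0f} GB")
print(f"GPT-3 weights: ~{weight_memory_gb(GPT3_PARAMS):.0f} GB")
```

Under that assumption, the original PaLM's weights alone would take roughly a terabyte of storage, which suggests why a variant small enough to run on a mobile device, as Gecko reportedly can, would need far fewer parameters.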

So that leads to the big question: Just how “large” is PaLM 2 in terms of parameter count? Google doesn’t say, and that has frustrated some industry experts who often fight for more transparency in what makes AI models tick.

That’s not the only property of PaLM 2 that Google has been quiet about. The company says that PaLM 2 has been trained on “a diverse set of sources: web documents, books, code, mathematics, and conversational data,” but doesn’t go into detail about what exactly that data is.

As with other large language model datasets, the PaLM 2 dataset likely includes a wide variety of copyrighted material used without permission and also potentially harmful material scraped from the Internet. Training data decisively influences the output of any AI model, so some experts have been advocating the use of open datasets that can provide opportunities for scientific reproducibility and ethical scrutiny.

“Now that LLMs are products (not just research), we are at a turning point: for-profit companies will become less and less transparent *specifically* about the components that are most important,” tweeted Jesse Dodge, a research scientist at the Allen Institute of AI. “Only if the open source community can organize together can we keep up!”

So far, criticism of hiding its secret sauce hasn’t stopped Google from pursuing wide deployment of AI models, despite a tendency in all LLMs to just make things up out of thin air. During Google I/O, company reps demoed AI features in many of its major products, which means a broad swath of the public could be battling AI confabulations soon.

And as far as LLMs go, PaLM 2 is far from the end of the story: In the I/O keynote, Pichai mentioned that a newer multimodal AI model called “Gemini” was currently in training. As the race for AI dominance continues, Google users in the US and 180 other countries (oddly excluding Canada and mainland Europe) can try PaLM 2 themselves as part of Google Bard, the experimental AI assistant.
