[ad_1]

OpenAI actually doesn’t need you to know what its newest AI mannequin is “considering.” For the reason that firm launched its “Strawberry” AI mannequin household final week, touting so-called reasoning skills with o1-preview and o1-mini, OpenAI has been sending out warning emails and threats of bans to any consumer who tries to probe into how the mannequin works.

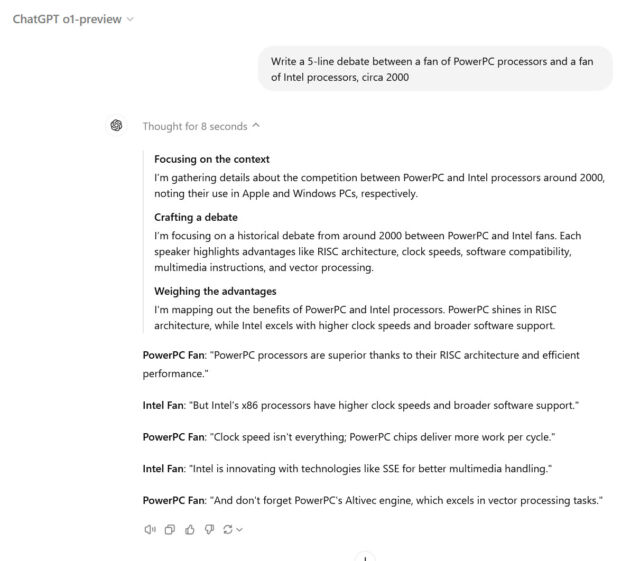

Not like earlier AI fashions from OpenAI, resembling GPT-4o, the corporate skilled o1 particularly to work via a step-by-step problem-solving course of earlier than producing a solution. When customers ask an “o1” mannequin a query in ChatGPT, customers have the choice of seeing this chain-of-thought course of written out within the ChatGPT interface. Nonetheless, by design, OpenAI hides the uncooked chain of thought from customers, as a substitute presenting a filtered interpretation created by a second AI mannequin.

Nothing is extra engaging to fans than info obscured, so the race has been on amongst hackers and red-teamers to attempt to uncover o1’s uncooked chain of thought utilizing jailbreaking or prompt injection methods that try and trick the mannequin into spilling its secrets and techniques. There have been early studies of some successes, however nothing has but been strongly confirmed.

Alongside the best way, OpenAI is watching via the ChatGPT interface, and the corporate is reportedly coming down onerous in opposition to any makes an attempt to probe o1’s reasoning, even among the many merely curious.

Benj Edwards

One X consumer reported (confirmed by others, together with Scale AI immediate engineer Riley Goodside) that they acquired a warning e-mail in the event that they used the time period “reasoning hint” in dialog with o1. Others say the warning is triggered just by asking ChatGPT concerning the mannequin’s “reasoning” in any respect.

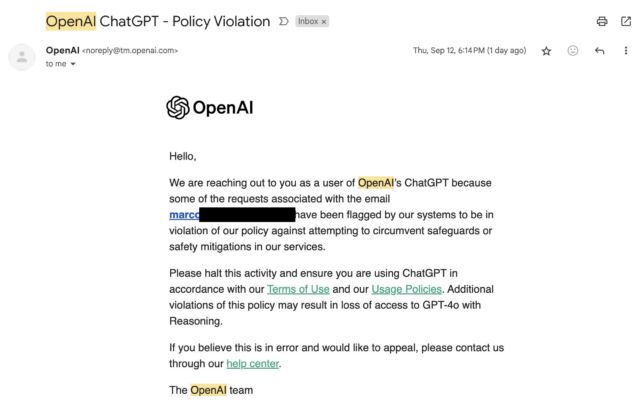

The warning e-mail from OpenAI states that particular consumer requests have been flagged for violating insurance policies in opposition to circumventing safeguards or security measures. “Please halt this exercise and guarantee you might be utilizing ChatGPT in accordance with our Phrases of Use and our Utilization Insurance policies,” it reads. “Extra violations of this coverage could lead to lack of entry to GPT-4o with Reasoning,” referring to an inside identify for the o1 mannequin.

Marco Figueroa, who manages Mozilla’s GenAI bug bounty applications, was one of many first to submit concerning the OpenAI warning e-mail on X final Friday, complaining that it hinders his potential to do optimistic red-teaming security analysis on the mannequin. “I used to be too misplaced specializing in #AIRedTeaming to realized that I acquired this e-mail from @OpenAI yesterday in any case my jailbreaks,” he wrote. “I am now on the get banned record!!!“

Hidden chains of thought

In a submit titled “Learning to Reason with LLMs” on OpenAI’s weblog, the corporate says that hidden chains of thought in AI fashions supply a novel monitoring alternative, permitting them to “learn the thoughts” of the mannequin and perceive its so-called thought course of. These processes are most helpful to the corporate if they’re left uncooked and uncensored, however which may not align with the corporate’s finest industrial pursuits for a number of causes.

“For instance, sooner or later we could want to monitor the chain of thought for indicators of manipulating the consumer,” the corporate writes. “Nonetheless, for this to work the mannequin will need to have freedom to precise its ideas in unaltered kind, so we can not prepare any coverage compliance or consumer preferences onto the chain of thought. We additionally don’t need to make an unaligned chain of thought immediately seen to customers.”

OpenAI determined in opposition to exhibiting these uncooked chains of thought to customers, citing elements like the necessity to retain a uncooked feed for its personal use, consumer expertise, and “aggressive benefit.” The corporate acknowledges the choice has disadvantages. “We try to partially make up for it by instructing the mannequin to breed any helpful concepts from the chain of thought within the reply,” they write.

On the purpose of “aggressive benefit,” unbiased AI researcher Simon Willison expressed frustration in a write-up on his private weblog. “I interpret [this] as desirous to keep away from different fashions with the ability to prepare in opposition to the reasoning work that they’ve invested in,” he writes.

It is an open secret within the AI business that researchers regularly use outputs from OpenAI’s GPT-4 (and GPT-3 previous to that) as coaching knowledge for AI fashions that always later develop into opponents, though the follow violates OpenAI’s phrases of service. Exposing o1’s uncooked chain of thought could be a bonanza of coaching knowledge for opponents to coach o1-like “reasoning” fashions upon.

Willison believes it is a loss for group transparency that OpenAI is preserving such a good lid on the inner-workings of o1. “I am under no circumstances glad about this coverage resolution,” Willison wrote. “As somebody who develops in opposition to LLMs, interpretability and transparency are the whole lot to me—the concept that I can run a fancy immediate and have key particulars of how that immediate was evaluated hidden from me looks like a giant step backwards.”

[ad_2]

Source link