On Thursday, Google capped off a rough week of providing inaccurate and sometimes dangerous answers through its experimental AI Overview feature by authoring a follow-up blog post titled, “AI Overviews: About last week.” In the post, attributed to Google VP Liz Reid, head of Google Search, the firm formally acknowledged issues with the feature and outlined steps taken to improve a system that appears flawed by design, even if it doesn’t realize it is admitting it.

To recap, the AI Overview feature, which the company showed off at Google I/O a few weeks ago, aims to provide search users with summarized answers to questions by using an AI model integrated with Google’s web ranking systems. Right now, it’s an experimental feature that isn’t active for everyone, but when a participating user searches for a topic, they may see an AI-generated answer at the top of the results, pulled from highly ranked web content and summarized by an AI model.

While Google claims this approach is “highly effective” and on par with its Featured Snippets in terms of accuracy, the past week has seen numerous examples of the AI system producing bizarre, incorrect, or even potentially harmful responses, as we detailed in a recent feature where Ars reporter Kyle Orland replicated many of the unusual outputs.

Drawing inaccurate conclusions from the web

Kyle Orland / Google

Given the circulating AI Overview examples, Google almost apologizes in the post and says, “We hold ourselves to a high standard, as do our users, so we expect and appreciate the feedback, and take it seriously.” But Reid, in an attempt to justify the errors, then goes into some very revealing detail about why AI Overviews provides inaccurate information:

AI Overviews work very differently than chatbots and other LLM products that people may have tried out. They’re not simply generating an output based on training data. While AI Overviews are powered by a customized language model, the model is integrated with our core web ranking systems and designed to carry out traditional “search” tasks, like identifying relevant, high-quality results from our index. That’s why AI Overviews don’t just provide text output, but include relevant links so people can explore further. Because accuracy is paramount in Search, AI Overviews are built to only show information that is backed up by top web results.

This means that AI Overviews generally don’t “hallucinate” or make things up in the ways that other LLM products might.

Here we see the fundamental flaw of the system: “AI Overviews are built to only show information that is backed up by top web results.” The design is based on the false assumption that Google’s page-ranking algorithm favors accurate results and not SEO-gamed garbage. Google Search has been broken for some time, and now the company is relying on those gamed and spam-filled results to feed its new AI model.
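To make that dependency concrete, here is a toy Python sketch. It is not Google’s system: the `Page` class, the `rank_score` field, the `overview` function, and the example URLs are all hypothetical, invented purely for illustration. It only shows that a pipeline restricted to top-ranked pages inherits whatever the ranking favors, since the summarizer never sees whether a page is accurate.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    claim: str
    rank_score: float  # hypothetical ranking signal; carries no accuracy information
    accurate: bool     # ground truth that the pipeline below never consults


def top_results(pages, k=2):
    """Return the k pages with the highest (hypothetical) ranking score."""
    return sorted(pages, key=lambda p: p.rank_score, reverse=True)[:k]


def overview(pages, k=2):
    """Answer using only top-ranked pages, and cite them as supporting links."""
    top = top_results(pages, k)
    return {"answer": top[0].claim, "links": [p.url for p in top]}


pages = [
    Page("https://example.com/seo-farm", "gamed claim", 0.95, False),
    Page("https://example.com/reference", "accurate claim", 0.60, True),
    Page("https://example.com/satire", "joke claim", 0.80, False),
]

result = overview(pages)
print(result["answer"])  # the claim from the highest-ranked page
print(result["links"])   # the pages offered as backing
```

In this made-up data set, the accurate page ranks lowest, so it never reaches the summary step at all. The answer is “backed up by top web results” and still wrong.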

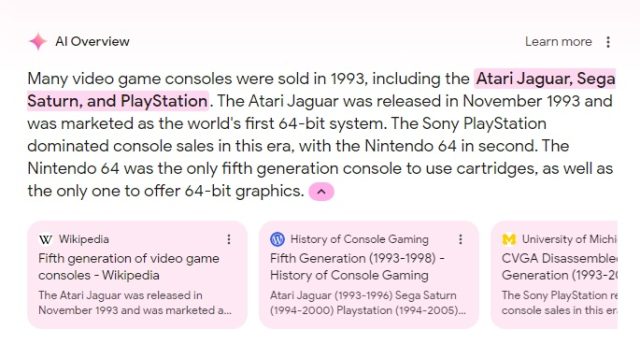

Even when the AI model draws from a more accurate source, as with the 1993 game console search seen above, Google’s AI language model can still draw inaccurate conclusions about the “accurate” data, confabulating erroneous information in a flawed summary of the information available.

Generally ignoring the folly of basing its AI results on a broken page-ranking algorithm, Google’s blog post instead attributes the commonly circulated errors to several other factors, including users making nonsensical searches “aimed at producing erroneous results.” Google does admit faults with the AI model, like misinterpreting queries, misinterpreting “a nuance of language on the web,” and lacking sufficient high-quality information on certain topics. It also suggests that some of the more egregious examples circulating on social media are fake screenshots.

“Some of these faked results have been obvious and silly,” Reid writes. “Others have implied that we returned dangerous results for topics like leaving dogs in cars, smoking while pregnant, and depression. Those AI Overviews never appeared. So we’d encourage anyone encountering these screenshots to do a search themselves to check.”

(No doubt some of the social media examples are fake, but it’s worth noting that any attempts to replicate those early examples now will likely fail because Google could have manually blocked the results. And it’s potentially a testament to how broken Google Search is if people believed extreme fake examples in the first place.)

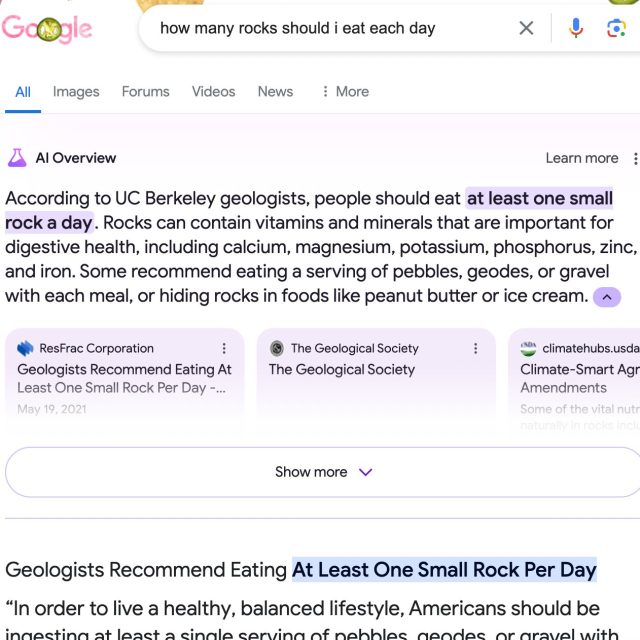

While addressing the “nonsensical searches” angle in the post, Reid uses the example search, “How many rocks should I eat each day,” which went viral in a tweet on May 23. Reid says, “Prior to these screenshots going viral, practically no one asked Google that question.” And since there isn’t much data on the web that answers it, she says there is a “data void” or “information gap” that was filled by satirical content found on the web, and the AI model found it and pushed it as an answer, much like Featured Snippets might. So basically, it was working exactly as designed.
