On Thursday, Meta unveiled early versions of its Llama 3 open-weights AI model that can be used to power text composition, code generation, or chatbots. It also announced that its Meta AI Assistant is now available on a website and is going to be integrated into its major social media apps, intensifying the company's efforts to position its products against other AI assistants like OpenAI's ChatGPT, Microsoft's Copilot, and Google's Gemini.

Like its predecessor, Llama 2, Llama 3 is notable for being a freely available, open-weights large language model (LLM) provided by a major AI company. Llama 3 technically does not qualify as "open source" because that term has a specific meaning in software (as we have mentioned in other coverage), and the industry has not yet settled on terminology for AI model releases that ship either code or weights with restrictions (you can read Llama 3's license here) or that ship without providing training data. We typically call these releases "open weights" instead.

For now, Llama 3 is available in two parameter sizes: 8 billion (8B) and 70 billion (70B), both of which are available as free downloads through Meta's website with a sign-up. Llama 3 comes in two versions: pre-trained (basically the raw, next-token-prediction model) and instruction-tuned (fine-tuned to follow user instructions). Each has an 8,192-token context limit.

Benj Edwards

Meta trained both models on two custom-built, 24,000-GPU clusters. In a podcast interview with Dwarkesh Patel, Meta CEO Mark Zuckerberg said that the company trained the 70B model on around 15 trillion tokens of data. Throughout the process, the model never reached "saturation" (that is, it never hit a wall in terms of capability increases). Eventually, Meta pulled the plug and moved on to training other models.

"I guess our prediction going in was that it was going to asymptote more, but even by the end it was still learning. We probably could have fed it more tokens, and it would have gotten somewhat better," Zuckerberg said on the podcast.

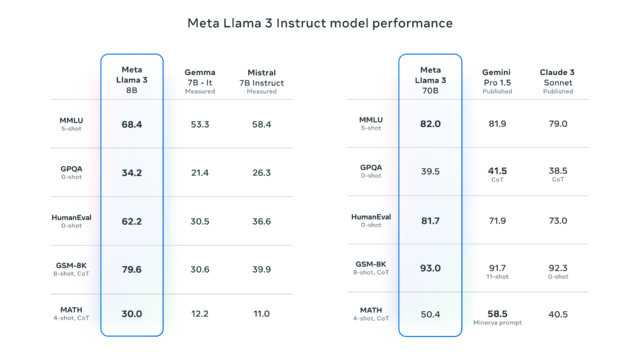

Meta also announced that it is currently training a 400B-parameter version of Llama 3, which some experts, such as Nvidia's Jim Fan, think may perform in the same league as GPT-4 Turbo, Claude 3 Opus, and Gemini Ultra on benchmarks like MMLU, GPQA, HumanEval, and MATH.

Speaking of benchmarks, we have devoted many words in the past to explaining how frustratingly imprecise benchmarks can be when applied to large language models. The problems include training contamination (that is, including benchmark test questions in the training dataset), cherry-picking on the part of vendors, and an inability to capture AI's general usefulness in an interactive session with chat-tuned models.

But, as expected, Meta provided some benchmarks for Llama 3 that list results from MMLU (undergraduate-level knowledge), GSM-8K (grade-school math), HumanEval (coding), GPQA (graduate-level questions), and MATH (math word problems). These show the 8B model performing well compared to open-weights models like Google's Gemma 7B and Mistral 7B Instruct, and the 70B model holding its own against Gemini Pro 1.5 and Claude 3 Sonnet.

Meta says that the Llama 3 model has been enhanced with coding capabilities (like Llama 2) and, for the first time, has been trained on both images and text, though it currently outputs only text. According to Reuters, Meta Chief Product Officer Chris Cox noted in an interview that more complex processing abilities (like executing multi-step plans) are expected in future updates to Llama 3. Those updates will also support multimodal outputs, that is, both text and images.

Meta plans to host the Llama 3 models on a range of cloud platforms, making them available through AWS, Databricks, Google Cloud, and other major providers.

Also on Thursday, Meta announced that Llama 3 will become the new basis of the Meta AI virtual assistant, which the company first announced in September. The assistant will appear prominently in search features for Facebook, Instagram, WhatsApp, and Messenger. It will also appear on the aforementioned dedicated website, which features a ChatGPT-like design that includes the ability to generate images in the same interface. The company also announced a partnership with Google to integrate real-time search results into the Meta AI assistant, adding to an existing partnership with Microsoft's Bing.
