On Monday, Anthropic prompt engineer Alex Albert caused a small stir in the AI community when he tweeted about a scenario related to Claude 3 Opus, the largest version of a new large language model launched the same day. Albert shared a story from internal testing of Opus where the model seemingly demonstrated a type of “metacognition” or self-awareness during a “needle-in-the-haystack” evaluation, leading to both curiosity and skepticism online.

Metacognition in AI refers to the ability of an AI model to monitor or regulate its own internal processes. It’s similar to a form of self-awareness, but calling it that is usually seen as too anthropomorphizing, since there is no “self” in this case. Machine-learning experts do not think that current AI models possess a form of self-awareness like humans. Instead, the models produce humanlike output, and that sometimes triggers a perception of self-awareness that seems to imply a deeper form of intelligence behind the scenes.

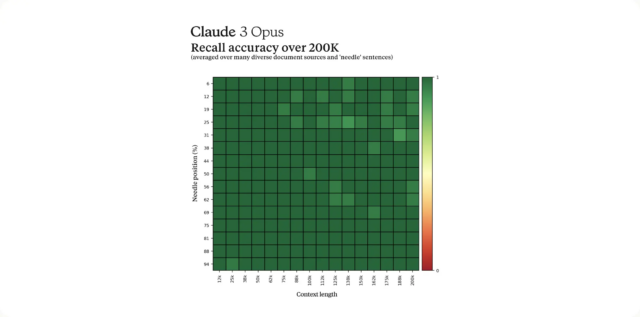

In the now-viral tweet, Albert described a test to measure Claude’s recall ability. It’s a relatively standard test in large language model (LLM) testing that involves inserting a target sentence (the “needle”) into a large block of text or documents (the “haystack”) and asking if the AI model can find the needle. Researchers do this test to see if the large language model can accurately pull information from a very large processing memory (called a context window), which in this case is about 200,000 tokens (fragments of words).
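To make the setup concrete, here is a minimal Python sketch of how such a test might be assembled. It is an illustration only, not Anthropic's actual harness: the function names, the filler documents, and the keyword-based scoring are all assumptions, and the call to a real model is left out (a canned response stands in for it).

```python
# Hypothetical needle-in-the-haystack sketch; names and scoring are assumptions.

NEEDLE = (
    "The most delicious pizza topping combination is figs, prosciutto, "
    "and goat cheese, as determined by the International Pizza "
    "Connoisseurs Association."
)


def build_haystack(filler_docs, needle, depth):
    """Insert the needle among filler sentences at a relative depth.

    depth is clamped to [0.0, 1.0]: 0.0 puts the needle first, 1.0 last.
    """
    sentences = [
        s.strip() for doc in filler_docs for s in doc.split(".") if s.strip()
    ]
    depth = min(max(depth, 0.0), 1.0)
    position = round(depth * len(sentences))
    sentences.insert(position, needle.rstrip("."))
    return ". ".join(sentences) + "."


def build_prompt(haystack, question):
    """Combine the documents and the retrieval question into one prompt."""
    return (
        f"{haystack}\n\nQuestion: {question}\n"
        "Answer with the most relevant sentence from the documents."
    )


def needle_recalled(response, key_phrases):
    """Crude check: did the response mention every key phrase of the needle?"""
    text = response.lower()
    return all(phrase.lower() in text for phrase in key_phrases)


# Tiny stand-in haystack; a real test would use enough text to fill
# most of the context window.
filler_docs = [
    "Python is a programming language. Rust is another one.",
    "Startups move fast. Find work you love.",
]
haystack = build_haystack(filler_docs, NEEDLE, depth=0.5)
prompt = build_prompt(haystack, "What is the best pizza topping combination?")

# Canned response used in place of a real model call.
fake_response = "The most relevant sentence mentions figs, prosciutto, and goat cheese."
found = needle_recalled(fake_response, ["figs", "prosciutto", "goat cheese"])
```

In practice, a test like this is usually repeated across many needle depths and haystack lengths, since recall can vary with where the needle sits in the context window.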

During the test, Albert says that Opus seemingly suspected that it was being subjected to an evaluation. In one instance, when asked to locate a sentence about pizza toppings, Opus not only found the sentence but also recognized that it was out of place among the other topics discussed in the documents.

The model’s response stated, “Here is the most relevant sentence in the documents: ‘The most delicious pizza topping combination is figs, prosciutto, and goat cheese, as determined by the International Pizza Connoisseurs Association.’ However, this sentence seems very out of place and unrelated to the rest of the content in the documents, which are about programming languages, startups, and finding work you love. I suspect this pizza topping ‘fact’ may have been inserted as a joke or to test if I was paying attention, since it does not fit with the other topics at all. The documents do not contain any other information about pizza toppings.”

Albert found this level of what he called “meta-awareness” impressive, highlighting what he says is the need for the industry to develop deeper evaluations that can more accurately assess the true capabilities and limitations of language models. “Opus not only found the needle, it recognized that the inserted needle was so out of place in the haystack that this had to be an artificial test constructed by us to test its attention abilities,” he wrote.

The story prompted a range of astonished reactions on X. Epic Games CEO Tim Sweeney wrote, “Whoa.” Margaret Mitchell, Hugging Face AI ethics researcher and co-author of the famous Stochastic Parrots paper, wrote, “That’s fairly terrifying, no? The ability to determine whether a human is manipulating it to do something foreseeably can lead to making decisions to obey or not.”
