
Aurich Lawson | Getty Images

Greetings, dear readers, and congratulations: we've reached the end of our four-part series on how Ars Technica is hosted in the cloud, and it has been a journey. We've gone through our infrastructure, our application stack, and our CI/CD strategy (that's "continuous integration and continuous deployment," the process by which we manage and maintain our site's code).

Now, to wrap things up, we have a bit of a grab bag of topics to go through. In this final part, we'll discuss some leftover configuration details I didn't get a chance to dive into in earlier parts, including how our battle-tested liveblogging system works (it's surprisingly simple, and yet it has withstood millions of readers hammering at it during Apple events). We'll also peek at how we handle authoritative DNS.

Finally, we'll close on something that I've been wanting to look at for a while: AWS's cloud-based 64-bit ARM service offerings. How much of our infrastructure could we shift over onto ARM64-based systems, how much work would that be, and what might the long-term benefits be, both in terms of performance and costs?

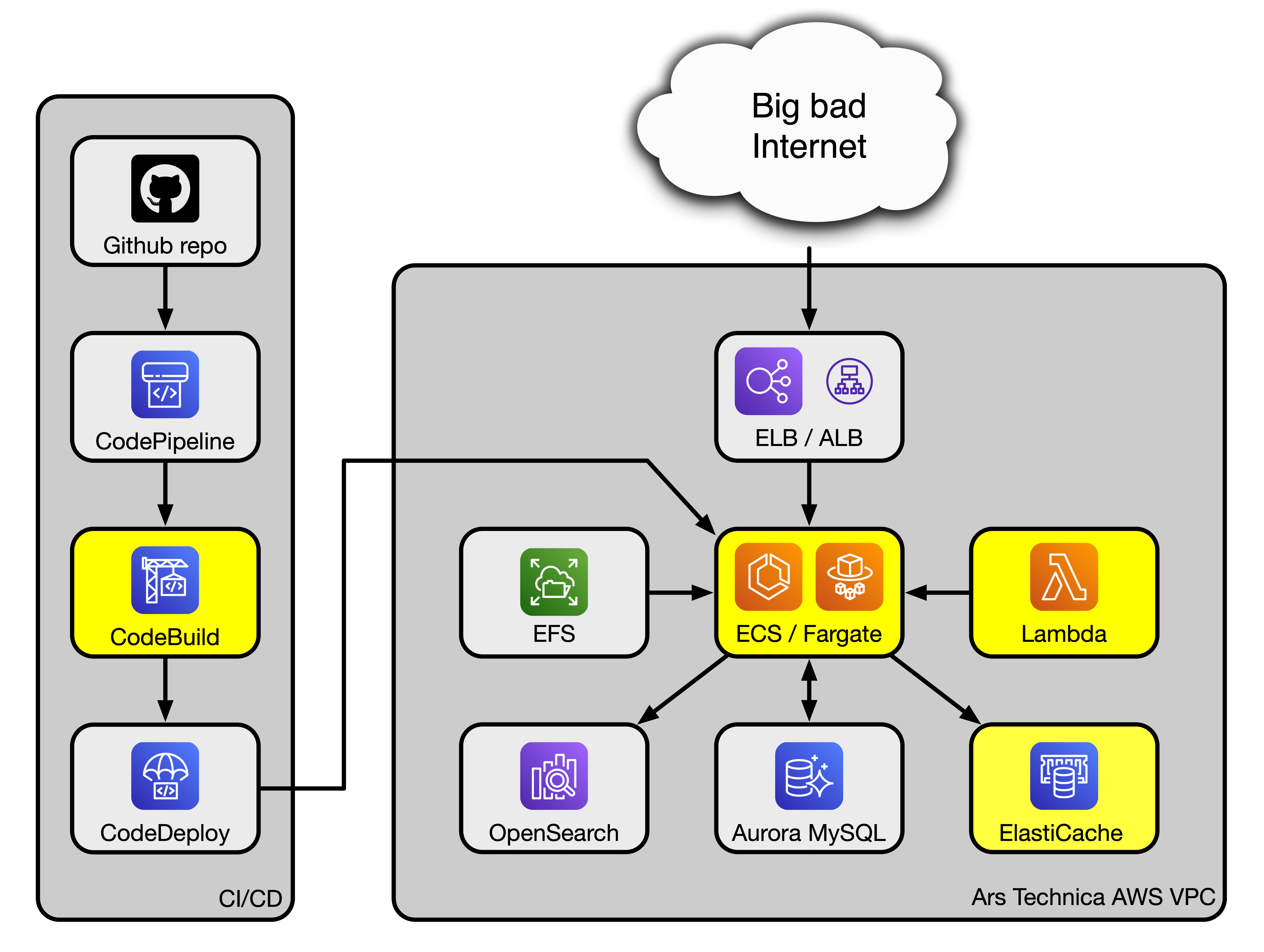

However first, as a result of I do know we now have readers who wish to skip forward, let’s re-introduce our block diagram and ensure we’re all caught up with what we’re doing immediately:

The recap: What we've got

So, recapping: Ars runs on WordPress for the front page, a smaller WordPress/WooCommerce instance for the merch store, and XenForo for the OpenForum. All of these applications live as containers in ECS tasks (where "task" in this case is functionally equivalent to a Docker host, containing a number of services). These tasks are invoked and killed as needed to scale the site up and down in response to the current amount of visitor traffic. Various other components of the stack contribute to keeping the site operational (like Aurora, which provides MySQL databases to the site, or Lambda, which we use to kick off WordPress scheduled tasks, among other things).
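To make the Lambda piece a little more concrete, here is a minimal sketch of what a function that kicks off WordPress scheduled tasks might look like. It is not our actual function. It assumes the tasks are triggered by requesting WordPress's standard `wp-cron.php` endpoint over HTTP, and `SITE_URL`, `build_cron_url`, and the injectable `opener` parameter are hypothetical names made up for illustration.

```python
import urllib.request
from urllib.parse import urljoin

# Hypothetical site URL, used only for illustration.
SITE_URL = "https://example.com/"


def build_cron_url(site_url: str) -> str:
    """Return the URL of WordPress's wp-cron.php endpoint for a site."""
    return urljoin(site_url, "wp-cron.php?doing_wp_cron")


def handler(event, context, opener=urllib.request.urlopen):
    """Lambda-style entry point: request wp-cron.php so WordPress runs any due tasks.

    `opener` defaults to urllib's urlopen; it is a parameter only so the
    function can be exercised without touching the network.
    """
    url = build_cron_url(SITE_URL)
    with opener(url, timeout=10) as response:
        return {"url": url, "status": response.status}
```

A function like this would be invoked on a schedule, so that scheduled tasks fire on time rather than depending on visitor traffic to trigger them.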

On the left side of the diagram, we have our CI/CD stack. The code that makes Ars work (which for us includes things like our WordPress PHP files, both core and plugin) lives in a private GitHub repo under version control. When we need to change our code (like if there's a WordPress update), we modify the source in the GitHub repo and then, using a whole set of tools, we push those changes out into the production web environment, and new tasks are spun up containing those changes. (As with the software stack, it's a little more complicated than that; consult part three for a more granular description of the process!)

Eagle-eyed readers might notice there's something different about the diagram above: Several of the services are highlighted in yellow. Well-spotted! Those are services that we might be able to switch over to run on ARM64 architecture, and we'll examine that near the end of this article.

Colors aside, there are also several things missing from that diagram. In trying to keep it as high-level as possible while still being useful, I omitted a whole mess of more basic infrastructure components, and one of those components is DNS. It's one of those things we can't operate without, so let's jump in there and talk about it.

