[ad_1]

Getty Photographs / Benj Edwards

Lately, quite a few viral music movies from a YouTube channel referred to as There I Ruined It have included AI-generated voices of well-known musical artists singing lyrics from shocking songs. One latest instance imagines Elvis singing lyrics to Sir Combine-a-Lot’s Baby Got Back. One other features a fake Johnny Money singing the lyrics to Aqua’s Barbie Woman.

(The unique Elvis video has since been taken down from YouTube attributable to a copyright declare from Common Music Group, however because of the magic of the Web, you possibly can hear it anyway.)

An excerpt copy of the “Elvis Sings Child Bought Again” video.

Clearly, since Elvis has been lifeless for 46 years (and Money for 20), neither man might have really sung the songs themselves. That is the place AI is available in. However as we’ll see, though generative AI might be wonderful, there’s nonetheless quite a lot of human expertise and energy concerned in crafting these musical mash-ups.

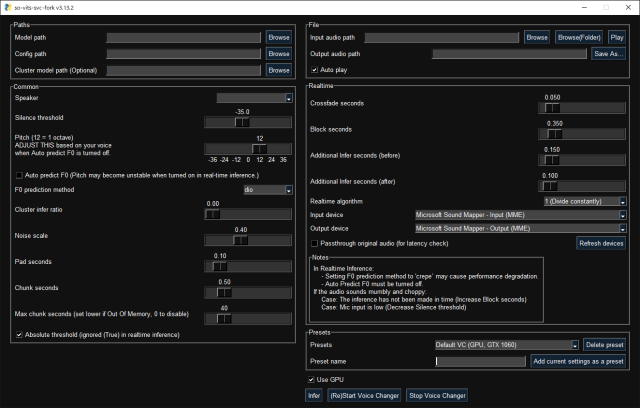

To determine how There I Ruined It does its magic, we first reached out to the channel’s creator, musician Dustin Ballard. Ballard’s response was low intimately, however he laid out the fundamental workflow. He makes use of an AI mannequin referred to as so-vits-svc to remodel his personal vocals he information into these of different artists. “It is presently not a really user-friendly course of (and the coaching itself is much more troublesome),” he advised Ars Technica in an e-mail, “however mainly after getting the skilled mannequin (primarily based on a big pattern of fresh audio references), then you possibly can add your individual vocal monitor, and it replaces it with the voice that you’ve got modeled. You then put that into your combine and construct the track round it.”

However let’s again up a second: What does “so-vits-svc” imply? The identify originates from a sequence of open supply applied sciences being chained collectively. The “so” half comes from “SoftVC” (VC for “voice conversion”), which breaks supply audio (a singer’s voice) into key elements that may be encoded and realized by a neural community. The “VITS” half is an acronym for “Variational Inference with adversarial studying for end-to-end Textual content-to-Speech,” coined on this 2021 paper. VITS takes data of the skilled vocal mannequin and generates the transformed voice output. And “SVC” means “singing voice conversion”—changing one singing voice to a different—versus changing somebody’s talking voice.

The latest There I Ruined It songs primarily use AI in a single regard: The AI mannequin depends on Ballard’s vocal efficiency, however it adjustments the timbre of his voice to that of another person, much like how Respeecher’s voice-to-voice know-how can transform one actor’s efficiency of Darth Vader into James Earl Jones’ voice. The remainder of the track comes from Ballard’s association in a traditional music app.

A sophisticated course of—in the mean time

Michael van Voorst

To get extra perception into the musical voice-cloning course of with so-vits-svc-fork (an altered model of the unique so-vits-svc), we tracked down Michael van Voorst, the creator of the Elvis voice AI mannequin that Ballard utilized in his Child Bought Again video. He walked us by the steps essential to create an AI mash-up.

“In an effort to create an correct reproduction of a voice, you begin off with creating an information set of fresh vocal audio samples from the individual you might be constructing a voice mannequin of,” mentioned van Voorst. “The audio samples should be of studio high quality for one of the best outcomes. If they’re of decrease high quality, it should replicate again into the vocal mannequin.”

Within the case of Elvis, van Voorst used vocal tracks from the singer’s well-known Aloha From Hawaii live performance in 1973 because the foundational materials to coach the voice mannequin. After cautious handbook screening, van Voorst extracted 36 minutes of high-quality audio, which he then divided into 10-second chunks for proper processing. “I listened rigorously for any interference, like band or viewers noise, and eliminated it from my information set,” he mentioned. Additionally, he tried to seize all kinds of vocal expressions: “The standard of the mannequin improves with extra and diversified samples.”

[ad_2]

Source link