Aurich Lawson | Getty Images

A little over three years ago, just before COVID hit, we ran a long piece on the tools and tricks that make Ars function without a physical office. Ars has spent a long time perfecting how to get things done as a distributed remote workforce, and as it turns out, we were a lot luckier than we realized, because that distributed nature made working through the pandemic more or less a non-event for us. While other companies were scrambling to set up work-from-home for their employees, we kept on trucking without having to do anything different.

However, there was a big change that Ars went through right around the time that article was published. January 2020 marked our transition away from physical infrastructure and into an entirely cloud-based hosting environment. After years of great service from the folks at Server Central (now Deft), the time had come for a leap into the clouds, and leap we did.

There were several big reasons to make the change, but the ones that mattered most were feature- and cost-related. Ars fiercely believes in running its own tech stack, mainly because we can iterate on new features faster that way, and our community platform is unique among Condé Nast brands. So when the rest of the company was either moving to or already on Amazon Web Services (AWS), we could hop on the bandwagon and take advantage of Condé's enterprise pricing. That, combined with not having to maintain physical reserve infrastructure to absorb big traffic spikes and being able to rely on scaling instead, fundamentally changed the equation for us.

In addition to cost, we also jumped at the chance to rearchitect how the Ars Technica site and its components were structured and served. We were using a "virtual private cloud" setup at our previous host (it was a pile of dedicated physical servers running VMware vSphere), but moving everything into AWS gave us the opportunity to reassess the site and adopt some solid reference architecture.

Cloudy with a chance of infrastructure

And now, with that redesign having been functional and stable for a few years and a few billion page views (really!), we want to invite you all behind the scenes to peek at how we keep a major site like Ars online and functional. This article will be the first in a four-part series on how Ars Technica works: we'll examine both the underlying technology choices that power Ars and the software with which we hook everything together.

This first piece, which we're embarking on now, will look at the setup from a high level and then focus on the specific technology components: we'll show the building blocks and how those blocks are arranged. In the next installment, we'll follow up with a more detailed look at the applications that run Ars and how those applications fit together within the infrastructure; after that, we'll dig into the development environment and look at how Ars Tech Director Jason Marlin creates and deploys changes to the site.

Finally, in part 4, we'll take a bit of a peek into the future. There are some changes we're thinking of making, since the lure (and price!) of 64-bit ARM offerings is a powerful thing, and we'll look at that stuff and talk about our upcoming plans to migrate to it.

Ars Technica: What we’re doing

But before we look at what we want to do tomorrow, let's look at what we're doing today. Gird your loins, dear readers, and let's dive in.

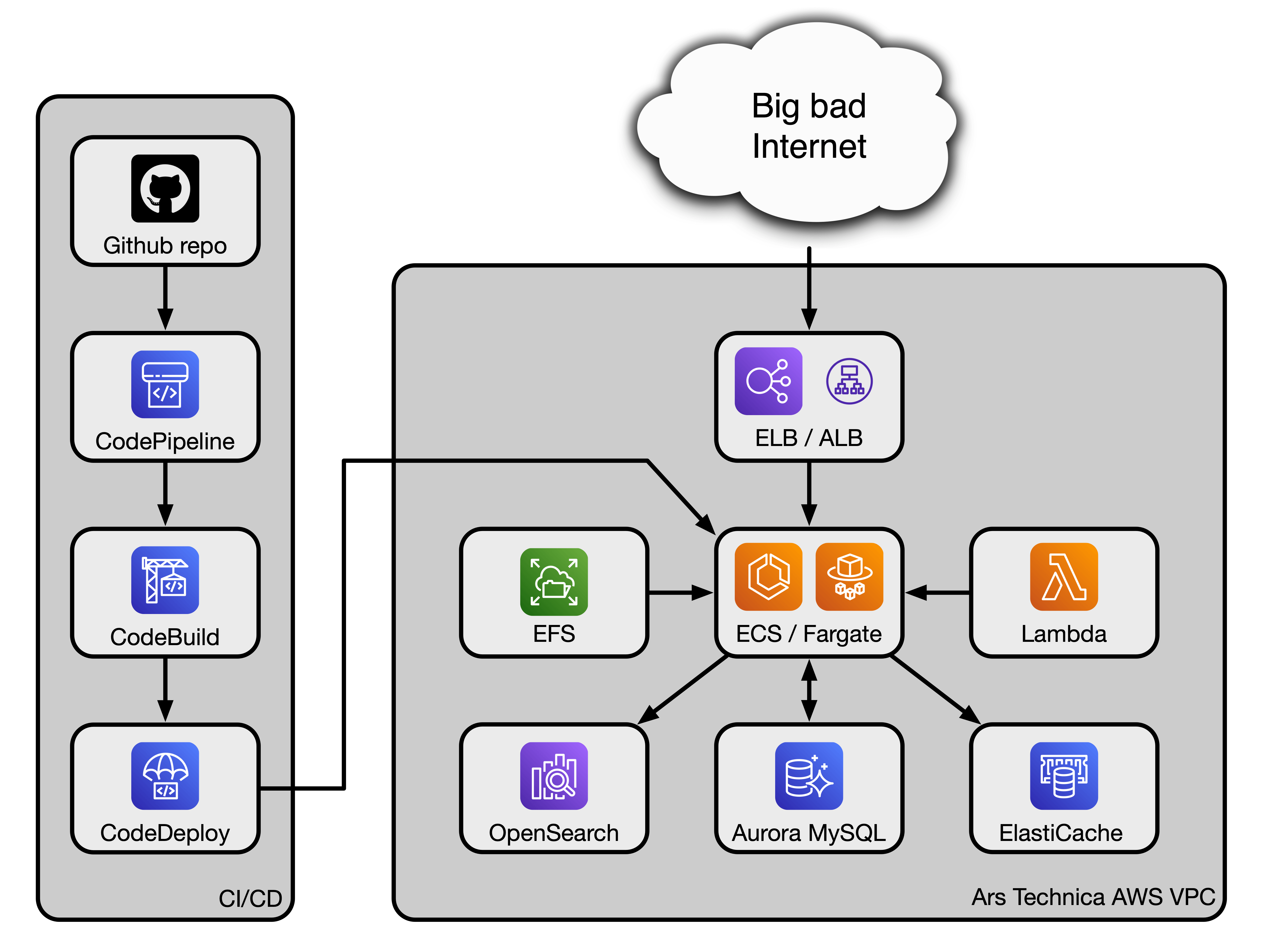

To begin, right here’s a block diagram of the precise AWS companies Ars makes use of. It’s a comparatively easy method to symbolize a fancy interlinked construction:

Lee Hutchinson

Ars leans on several pieces of the AWS tech stack. We depend on an Application Load Balancer (ALB) to first route incoming visitor traffic to the appropriate Ars back-end service (more on those services in part 2). Downstream of the ALB, we use two services called Elastic Container Service (ECS) and Fargate in conjunction with each other to spin up Docker-like containers to do work. Another service, Lambda, is used to run cron jobs for the WordPress application that forms the core of the Ars site (yes, Ars runs WordPress; we'll get into that in part 2).
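To make the ALB's routing step a little more concrete, here is a minimal sketch of how path-based listener rules pick a back end: rules are checked in priority order (lowest number first), the first rule whose path pattern matches wins, and anything unmatched falls through to a default action. ALB path patterns are case-sensitive and support `*` (any run of characters) and `?` (exactly one character). The priorities, path patterns, and target-group names below are hypothetical placeholders, not Ars's actual configuration, and real ALB rules can also match on host headers, HTTP methods, query strings, and more.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ListenerRule:
    priority: int       # lower numbers are evaluated first, as on an ALB
    path_pattern: str   # '*' matches any run of characters, '?' matches one character
    target_group: str   # hypothetical target-group name


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate an ALB-style path pattern into a case-sensitive regex."""
    escaped = re.escape(pattern)
    # Turn the escaped wildcards back into their regex equivalents.
    return re.compile(escaped.replace(r"\*", ".*").replace(r"\?", "."))


def route(path: str, rules: list[ListenerRule], default: str) -> str:
    """Return the target group of the first matching rule, else the default action."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if pattern_to_regex(rule.path_pattern).fullmatch(path):
            return rule.target_group
    return default


# Hypothetical rules: forum and API traffic get their own services;
# everything else falls through to the WordPress service.
RULES = [
    ListenerRule(10, "/forum/*", "forum-service"),
    ListenerRule(20, "/api/*", "api-service"),
]

if __name__ == "__main__":
    for p in ["/forum/threads/123", "/api/v1/stories", "/gadgets/2023/05/a-story/"]:
        print(p, "->", route(p, RULES, default="wordpress-service"))
```

Running it prints the hypothetical target group each sample path lands on. In a stack like the one described above, each target group would then point at containers that ECS schedules on Fargate.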
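The article doesn't say exactly how the Lambda cron jobs are wired up. One common pattern for WordPress, shown here purely as an assumption, is to turn off WordPress's built-in cron that piggybacks on page loads (the `DISABLE_WP_CRON` constant in `wp-config.php`) and have a function invoked on a schedule, for example by an Amazon EventBridge rule, request `wp-cron.php` at a fixed interval. The `WP_SITE_URL` environment variable and the 30-second timeout are hypothetical; `lambda_handler(event, context)` follows the standard Python Lambda handler signature, with an extra, hypothetical `opener` argument so the function can be exercised without network access.

```python
import json
import os
import urllib.request

# Hypothetical configuration: the public site URL is supplied as an environment variable.
SITE_URL = os.environ.get("WP_SITE_URL", "https://example.com")
REQUEST_TIMEOUT_SECONDS = 30  # hypothetical; keep well under the function's own timeout


def cron_url(site_url: str) -> str:
    """Build the URL of WordPress's cron endpoint, tolerating a trailing slash."""
    return site_url.rstrip("/") + "/wp-cron.php"


def lambda_handler(event, context, opener=urllib.request.urlopen):
    """Entry point invoked on a schedule; asks WordPress to run any due cron events."""
    url = cron_url(SITE_URL)
    # A plain GET is enough: wp-cron.php runs whatever scheduled hooks are due.
    with opener(url, timeout=REQUEST_TIMEOUT_SECONDS) as response:
        status = response.status
    return {"statusCode": status, "body": json.dumps({"triggered": url})}
```

Keeping the schedule outside WordPress means cron work runs on a predictable clock rather than depending on visitor page loads, which fits a web tier made of interchangeable containers.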
