On Monday, a tweeted AI-generated image suggesting a large explosion at the Pentagon caused brief confusion, including a reported small dip in the stock market. It originated from a verified Twitter account named "Bloomberg Feed," unaffiliated with the well-known Bloomberg media company, and was quickly exposed as a hoax. However, before it was debunked, large accounts such as Russia Today had already spread the misinformation, The Washington Post reported.

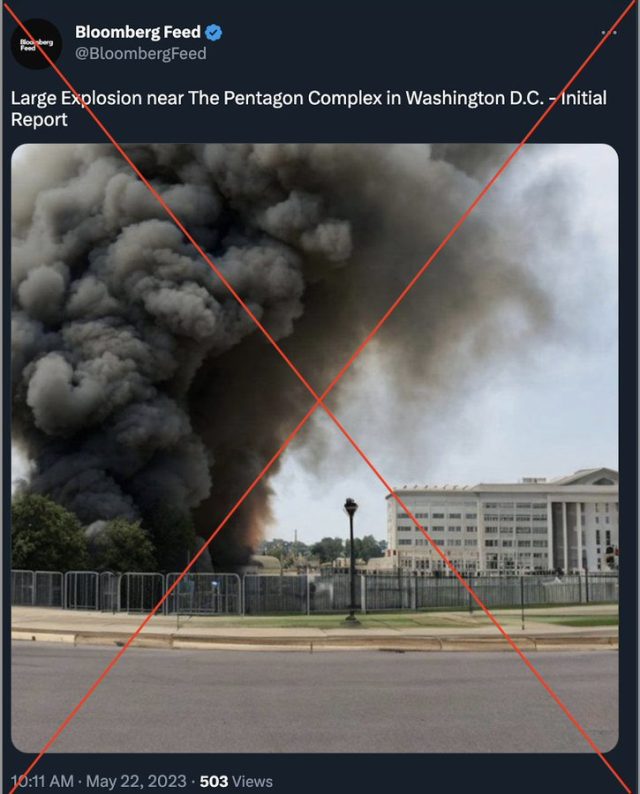

The fake image depicted a large plume of black smoke next to a building vaguely reminiscent of the Pentagon, accompanied by the tweet "Large Explosion near The Pentagon Complex in Washington D.C. — Inital Report." Local authorities confirmed that the image did not accurately depict the Pentagon. On closer inspection, with its blurry fence bars and building columns, it looks like a fairly sloppy AI-generated image created by a model like Stable Diffusion.

Before Twitter suspended the fake Bloomberg account, it had posted 224,000 tweets and gained fewer than 1,000 followers, according to the Post, but it is unclear who ran it or why they shared the false image. Besides Bloomberg Feed, other accounts that shared the false report include "Walter Bloomberg" and "Breaking Market News," both unaffiliated with the real Bloomberg organization.

This incident underlines the threats AI-generated images may pose in the world of hastily shared social media, especially on a Twitter that now sells verification. In March, fake images of Donald Trump's arrest created with Midjourney reached a wide audience. Although they were clearly marked as fake, their realism sparked fears that people would mistake them for real photos. That same month, AI-generated images of Pope Francis in a white puffer coat fooled many who saw them on social media.

The pope in a puffy coat is one thing, but when a fake tweet involves a government subject like the headquarters of the US Department of Defense, the consequences could be more severe. Beyond general confusion on Twitter, the deceptive tweet may have affected the stock market. The Washington Post says that the Dow Jones Industrial Average dropped 85 points in four minutes after the tweet spread but quickly rebounded.

Much of the confusion over the false tweet may have been enabled by changes at Twitter under its new owner, Elon Musk. Musk fired content moderation teams shortly after his takeover and largely automated the account verification process, switching to a system where anyone can pay for a blue check mark. Critics argue that this practice makes the platform more susceptible to misinformation.

While authorities easily identified the explosion photo as fake because of its inaccuracies, image synthesis models like Midjourney and Stable Diffusion mean it no longer takes artistic skill to create convincing fakes, lowering the barrier to entry and opening the door to potentially automated misinformation machines. The ease of creating fakes, combined with the viral nature of a platform like Twitter, means that false information can spread faster than it can be fact-checked.

But in this case, the image didn't need to be high quality to make an impact. Sam Gregory, executive director of the human rights organization Witness, pointed out to The Washington Post that when people want to believe something, they let down their guard and fail to check the veracity of the information before sharing it. He described the false Pentagon image as a "shallow fake" (as opposed to a more convincing "deepfake").

"The way people are exposed to these shallow fakes, it doesn't require something to look exactly like something else for it to get attention," he said. "People will readily take and share things that don't look exactly right but feel right."
