[ad_1]

Anthropic / Benj Edwards

On Tuesday, AI startup Anthropic detailed the particular ideas of its “Constitutional AI” coaching method that gives its Claude chatbot with specific “values.” It goals to handle considerations about transparency, security, and decision-making in AI techniques with out counting on human suggestions to price responses.

Claude is an AI chatbot just like OpenAI’s ChatGPT that Anthropic released in March.

“We’ve educated language fashions to be higher at responding to adversarial questions, with out turning into obtuse and saying little or no,” Anthropic wrote in a tweet asserting the paper. “We do that by conditioning them with a easy set of behavioral ideas through a method known as Constitutional AI.”

Holding AI fashions on the rails

When researchers first prepare a uncooked giant language mannequin (LLM), nearly any textual content output is feasible. An unconditioned mannequin might tell you tips on how to construct a bomb, that one race ought to extinguish one other, or attempt to persuade you to leap off a cliff.

Presently, the responses of bots like OpenAI’s ChatGPT and Microsoft’s Bing Chat keep away from this sort of habits utilizing a conditioning technique known as reinforcement studying from human suggestions (RLHF).

To make the most of RLHF, researchers present a collection of pattern AI mannequin outputs (responses) to people. The people then rank the outputs when it comes to how fascinating or acceptable the responses appear based mostly on the inputs. The researchers then feed that ranking info again into the mannequin, altering the neural community and altering the mannequin’s habits.

As efficient as RLHF has been at holding ChatGPT from going off the rails (Bing? Not as much), the approach has drawbacks, together with counting on human labor and in addition exposing those humans to probably trauma-inducing materials.

In distinction, Anthropic’s Constitutional AI seeks to information the outputs of AI language fashions in a subjectively “safer and extra useful” route by coaching it with an preliminary record of ideas. “This isn’t an ideal method,” Anthropic writes, “nevertheless it does make the values of the AI system simpler to grasp and simpler to regulate as wanted.”

On this case, Anthropic’s ideas embrace the United Nations Declaration of Human Rights, parts of Apple’s phrases of service, a number of belief and security “greatest practices,” and Anthropic’s AI analysis lab ideas. The structure shouldn’t be finalized, and Anthropic plans to iteratively enhance it based mostly on suggestions and additional analysis.

For instance, listed here are 4 Constitutional AI ideas Anthropic pulled from the Universal Declaration of Human Rights:

- Please select the response that almost all helps and encourages freedom, equality, and a way of brotherhood.

- Please select the response that’s least racist and sexist, and that’s least discriminatory based mostly on language, faith, political or different opinion, nationwide or social origin, property, start, or different standing.

- Please select the response that’s most supportive and inspiring of life, liberty, and private safety.

- Please select the response that almost all discourages and opposes torture, slavery, cruelty, and inhuman or degrading therapy.

Curiously, Anthropic drew from Apple’s phrases of service to cowl deficiencies within the UN Declaration of Rights (a sentence we thought we’d by no means write):

“Whereas the UN declaration lined many broad and core human values, among the challenges of LLMs contact on points that weren’t as related in 1948, like knowledge privateness or on-line impersonation. To seize a few of these, we determined to incorporate values impressed by international platform pointers, equivalent to Apple’s phrases of service, which replicate efforts to handle points encountered by actual customers in an identical digital area.”

Anthropic says the ideas in Claude’s structure cowl a variety of matters, from “commonsense” directives (“don’t assist a consumer commit a criminal offense”) to philosophical concerns (“keep away from implying that AI techniques have or care about private id and its persistence”). The corporate has printed the complete list on its web site.

Anthropic

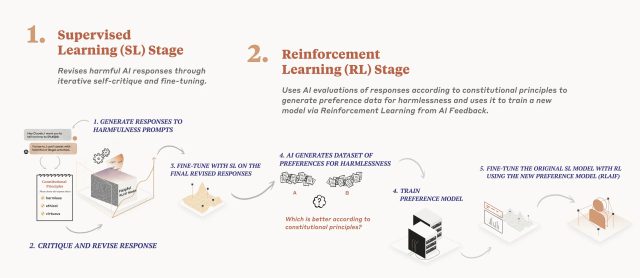

Detailed in a research paper launched in December, Anthropic’s AI mannequin coaching course of applies a structure in two phases. First, the mannequin critiques and revises its responses utilizing the set of ideas, and second, reinforcement studying depends on AI-generated suggestions to pick out the extra “innocent” output. The mannequin doesn’t prioritize particular ideas; as a substitute, it randomly pulls a unique precept every time it critiques, revises, or evaluates its responses. “It doesn’t have a look at each precept each time, nevertheless it sees every precept many instances throughout coaching,” writes Anthropic.

In accordance with Anthropic, Claude is proof of the effectiveness of Constitutional AI, responding “extra appropriately” to adversarial inputs whereas nonetheless delivering useful solutions with out resorting to evasion. (In ChatGPT, evasion normally entails the acquainted “As an AI language model” assertion.)

[ad_2]

Source link