OpenAI / Stable Diffusion

On Tuesday, OpenAI announced new controls that let ChatGPT users turn off chat history, which also opts them out of having those conversations used as data for training AI models. Users can now also export their chat history for local storage.

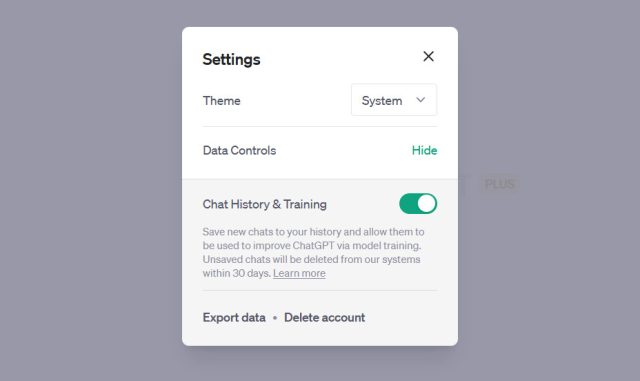

The new controls, which rolled out to all ChatGPT users today, can be found in ChatGPT's settings. Conversations that begin with chat history disabled won't be used to train and improve the ChatGPT model, and they won't appear in the history sidebar. OpenAI will retain these conversations internally for 30 days and review them “only when needed to monitor for abuse” before permanently deleting them.

However, users who want to opt out of providing data to OpenAI for training will lose the conversation history feature. It's unclear why users cannot keep conversation history while also opting out of model training.

Previously, ChatGPT kept track of conversations and used that data to fine-tune OpenAI's AI models. Users could clear their chat history on demand, but any conversation could still be used for fine-tuning. That posed a significant privacy issue, especially for sensitive information that corporate employees, lawyers, or doctors might share with ChatGPT.

Benj Edwards / Ars Technica

ChatGPT's conversation history got OpenAI in hot water in March, when a bug briefly exposed some ChatGPT users' chat histories to other people. That incident attracted regulatory interest in Italy that has not yet been resolved. The new privacy-related ChatGPT features are likely tied to that resolution process.

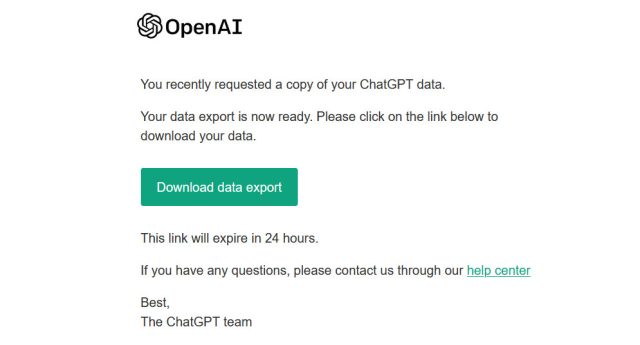

Also on Tuesday, OpenAI introduced a new “export” option in ChatGPT settings that lets users export their ChatGPT data to files that can be stored locally on a PC. We tried the export option in ChatGPT Settings (click “Show” beside “Data Controls”) and received an email containing a link to a compressed HTML file and several JSON files holding our saved conversation history with ChatGPT. The exported history extended back only to the last time we had cleared our conversation history.

Benj Edwards / Ars Technica

For professionals and enterprises that “need more control over their data,” OpenAI also announced that it is working on a new “ChatGPT Business” subscription that will opt users out of model training by default, according to OpenAI's Data Controls FAQ. OpenAI says ChatGPT Business will launch “in the coming months.”
