
Ars Technica

Not to be left out of the push to integrate generative AI into search, on Wednesday DuckDuckGo announced DuckAssist, an AI-powered factual summary service powered by technology from Anthropic and OpenAI. It is available for free today as a wide beta test for users of DuckDuckGo's browser extensions and browsing apps. Because DuckAssist is powered by an AI model, the company admits that it may make stuff up, but it hopes that will happen rarely.

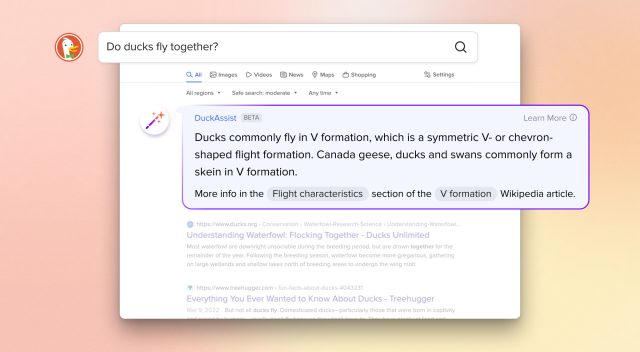

Here's how it works: if a DuckDuckGo user searches a question that can be answered by Wikipedia, DuckAssist may appear and use AI natural language technology to generate a brief summary of what it finds in Wikipedia, with source links listed below. The summary appears in a special box above DuckDuckGo's regular search results.

The company positions DuckAssist as a new type of "Instant Answer," a feature that spares users from having to dig through web search results to find quick information on topics like news, maps, and weather. Instead, the search engine presents the Instant Answer results above the usual list of websites.


DuckDuckGo doesn't say which large language model (LLM) or models it uses to generate DuckAssist, although some form of OpenAI API seems likely. Ars Technica has reached out to DuckDuckGo representatives for clarification. However, DuckDuckGo CEO Gabriel Weinberg explains how the feature uses sourcing in a company blog post:

DuckAssist answers questions by scanning a specific set of sources (for now that's usually Wikipedia, and occasionally related sites like Britannica) using DuckDuckGo's active indexing. Because we're using natural language technology from OpenAI and Anthropic to summarize what we find in Wikipedia, these answers should be more directly responsive to your actual question than traditional search results or other Instant Answers.

Since DuckDuckGo's main selling point is privacy, the company says that DuckAssist is "anonymous" and emphasizes that it does not share search or browsing history with anyone. "We also keep your search and browsing history anonymous to our search content partners," Weinberg writes, "in this case, OpenAI and Anthropic, used for summarizing the Wikipedia sentences we identify."

If DuckDuckGo is using OpenAI's GPT-3 or ChatGPT API, one might worry that the site could send each user's query to OpenAI every time the feature is invoked. But reading between the lines, it appears that only the Wikipedia article (or an excerpt of one) gets sent to OpenAI for summarization, not the user's search itself. We have reached out to DuckDuckGo for clarification on this point as well.
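To make that reading concrete, here is a minimal Python sketch of a retrieve-then-summarize flow in which only selected Wikipedia sentences, never the raw search query, reach the outside summarizer. DuckDuckGo has not published its implementation, so everything here is hypothetical: the function names, the naive word-overlap sentence selection, and the `summarize` callable, which stands in for a partner API such as OpenAI's or Anthropic's.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class InstantAnswer:
    summary: str        # text shown in the answer box
    sources: list[str]  # source links listed below the summary


def _words(text: str) -> set[str]:
    # Lowercased alphanumeric tokens; a deliberately naive tokenizer.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_sentences(query: str, article_text: str, max_sentences: int = 3) -> list[str]:
    # Hypothetical local step: rank article sentences by how many query words
    # they share. This happens on the search engine's side, so the query
    # itself never needs to leave it.
    query_words = _words(query)
    sentences = re.split(r"(?<=[.!?])\s+", article_text.strip())
    scored = [(len(query_words & _words(s)), i, s) for i, s in enumerate(sentences)]
    relevant = [t for t in scored if t[0] > 0]
    top = sorted(relevant, key=lambda t: (-t[0], t[1]))[:max_sentences]
    # Return the chosen sentences in their original article order.
    return [s for _, _, s in sorted(top, key=lambda t: t[1])]


def duck_assist_sketch(
    query: str,
    article_text: str,
    source_url: str,
    summarize: Callable[[list[str]], str],
) -> Optional[InstantAnswer]:
    sentences = select_sentences(query, article_text)
    if not sentences:
        return None  # nothing relevant found, so no answer box is shown
    # Only the selected Wikipedia sentences are passed to the (stand-in)
    # summarization partner; the user's query is not part of the payload.
    summary = summarize(sentences)
    return InstantAnswer(summary=summary, sources=[source_url])
```

In a quick test you can pass a stub such as `lambda s: " ".join(s)` as `summarize`. A real system would call an LLM at that point, which is also where the hallucination risk discussed below comes in.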

DuckDuckGo calls DuckAssist "the first in a series of generative AI-assisted features we hope to roll out in the coming months." If the launch goes well, and nobody breaks it with adversarial prompts, DuckDuckGo plans to roll out the feature to all search users "in the coming weeks."

DuckDuckGo: Risk of hallucinations "significantly diminished"

As we have previously covered on Ars, LLMs tend to produce convincing but erroneous results, which AI researchers call "hallucinations" as a term of art in the field. Hallucinations can be hard to spot unless you know the material being referenced, and they arise partly because GPT-style LLMs from OpenAI do not distinguish between fact and fiction in their datasets. Additionally, the models can make false inferences based on data that is otherwise accurate.

On this point, DuckDuckGo hopes to avoid hallucinations by leaning heavily on Wikipedia as a source: "by asking DuckAssist to only summarize information from Wikipedia and related sources," Weinberg writes, "the probability that it will 'hallucinate' (that is, just make something up) is significantly diminished."

While relying on a quality source of information may reduce errors caused by false information in the AI's dataset, it may not reduce false inferences. DuckDuckGo also puts the burden of fact-checking on the user, providing a source link below the AI-generated result that can be used to check its accuracy. But the system won't be perfect, and CEO Weinberg admits it: "Nonetheless, DuckAssist won't generate accurate answers all of the time. We fully expect it to make mistakes."

As more companies deploy LLM technology that can easily misinform, it may take some time and widespread use before companies and customers decide what level of hallucination is tolerable in an AI-powered product designed to inform people factually.
