
Ars Technica

On Thursday, researchers from Google announced a new generative AI model called MusicLM that can create 24 kHz musical audio from text descriptions, such as “a calming violin melody backed by a distorted guitar riff.” It can also transform a hummed melody into a different musical style and output music for several minutes.
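
MusicLM outputs raw audio rather than notation, so the size of each result scales with sample rate times duration. The sketch below makes that concrete. It assumes mono output at the 24 kHz rate cited above. The five-minute duration is only an illustrative example, and `samples_for` is a hypothetical helper, not part of any Google release.

```python
# Assumption: mono output at the 24 kHz rate the article cites.
SAMPLE_RATE_HZ = 24_000


def samples_for(duration_s: float, sample_rate_hz: int = SAMPLE_RATE_HZ, channels: int = 1) -> int:
    """Count the raw audio samples needed for a clip of duration_s seconds."""
    return int(round(duration_s * sample_rate_hz)) * channels


if __name__ == "__main__":
    # A 10-second clip versus a five-minute clip.
    print(samples_for(10))      # 240000
    print(samples_for(5 * 60))  # 7200000
```

Even a few minutes of music means millions of individual samples. This is part of why such systems work on compressed token sequences rather than predicting samples one at a time.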

MusicLM uses an AI model trained on what Google calls “a large dataset of unlabeled music,” along with captions from MusicCaps, a new dataset composed of 5,521 music-text pairs. MusicCaps gets its text descriptions from human experts and its matching audio clips from Google’s AudioSet, a collection of over 2 million labeled 10-second sound clips pulled from YouTube videos.
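
A quick back-of-the-envelope calculation puts those dataset sizes in hours. It treats AudioSet's "over 2 million" clips as a lower bound of exactly 2 million. It also assumes each MusicCaps pair uses a single 10-second AudioSet clip. `total_hours` is a hypothetical helper written for this illustration.

```python
def total_hours(num_clips: int, clip_seconds: float = 10.0) -> float:
    """Total audio duration in hours for num_clips clips of clip_seconds each."""
    return num_clips * clip_seconds / 3600


if __name__ == "__main__":
    # "Over 2 million" clips, so this is a lower bound.
    print(f"AudioSet (lower bound): {total_hours(2_000_000):,.0f} h")  # 5,556 h
    # Assumes each MusicCaps pair uses one 10-second AudioSet clip.
    print(f"MusicCaps: {total_hours(5_521):.1f} h")  # 15.3 h
```

Under these assumptions, the human-captioned MusicCaps set amounts to roughly 15 hours of audio. AudioSet as a whole runs to thousands of hours.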

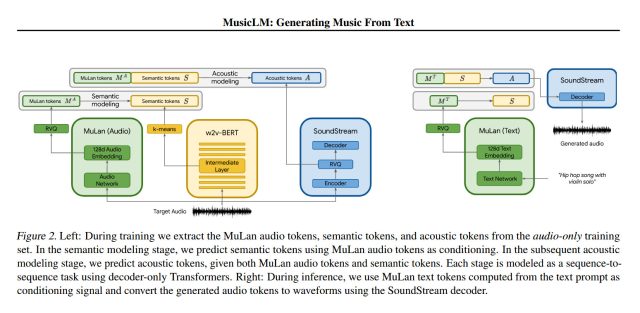

Generally speaking, MusicLM works in two main parts. First, it takes a sequence of audio tokens (pieces of sound) and maps them to semantic tokens (words that represent meaning) in captions for training. The second part receives user captions and/or input audio and generates acoustic tokens (pieces of sound that make up the resulting song output). The system relies on an earlier AI model called AudioLM (introduced by Google in September) along with other components such as SoundStream and MuLan.
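
To make the two-stage description easier to follow, here is a deliberately toy sketch of the generation-time data flow. A caption becomes a conditioning vector, the conditioning becomes a short sequence of coarse semantic tokens, and each semantic token expands into several finer acoustic tokens. Every function name, the vocabulary size, and the arithmetic inside each stage are hypothetical placeholders, not trained models and not Google's code. MuLan (a joint music/text embedding model) and SoundStream (a neural audio codec) appear only in comments to indicate which role each stand-in imitates.

```python
import hashlib
from typing import List

# Everything here is a hypothetical stand-in. The arithmetic is placeholder
# logic that only mimics the shape of the data flow, not a trained model.

VOCAB_SIZE = 1024  # hypothetical token vocabulary size


def embed_conditioning(caption: str, dim: int = 8) -> List[int]:
    """Toy stand-in for a text/audio embedder (the role MuLan plays)."""
    digest = hashlib.sha256(caption.encode("utf-8")).digest()
    return list(digest[:dim])


def semantic_stage(cond: List[int], length: int) -> List[int]:
    """Stage 1 (toy): emit a short, coarse sequence of semantic tokens."""
    return [(cond[i % len(cond)] * 31 + i) % VOCAB_SIZE for i in range(length)]


def acoustic_stage(semantic: List[int], cond: List[int], per_token: int = 4) -> List[int]:
    """Stage 2 (toy): expand each semantic token into finer acoustic tokens."""
    acoustic: List[int] = []
    for i, s in enumerate(semantic):
        for j in range(per_token):
            acoustic.append((s * 7 + cond[(i + j) % len(cond)] + j) % VOCAB_SIZE)
    return acoustic


def generate(caption: str, semantic_len: int = 5) -> List[int]:
    """Caption -> semantic tokens -> acoustic tokens.

    A real system would hand the acoustic tokens to a neural codec decoder
    (the role SoundStream plays) to get a waveform; that step is omitted here.
    """
    cond = embed_conditioning(caption)
    semantic = semantic_stage(cond, semantic_len)
    return acoustic_stage(semantic, cond)


if __name__ == "__main__":
    tokens = generate("a calming violin melody backed by a distorted guitar riff")
    print(len(tokens))  # 20 (5 semantic tokens x 4 acoustic tokens each)
```

The key design point the sketch mirrors is the hierarchy. A short, coarse sequence fixes the overall content, and a longer, finer sequence fills in acoustic detail.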

Google claims that MusicLM outperforms previous AI music generators in audio quality and adherence to text descriptions. On the MusicLM demonstration page, Google provides numerous examples of the AI model in action. The examples include audio generated from “rich captions” that describe the feel of the music, and even vocals (which so far are gibberish). Here is an example of a rich caption that they provide:

Slow tempo, bass-and-drums-led reggae song. Sustained electric guitar. High-pitched bongos with ringing tones. Vocals are relaxed with a laid-back feel, very expressive.

Google also shows off several other MusicLM capabilities:

- “Long generation,” which creates five-minute music clips from a simple prompt.
- “Story mode,” which takes a sequence of text prompts and turns it into a morphing series of musical tunes.
- “Text and melody conditioning,” which takes a human humming or whistling audio input and changes it to match the style laid out in a prompt.
- Generating music that matches the mood of image captions.
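
Story mode implies some way of telling the generator which prompt applies at which point in time. One simple assumption is a timed list of prompts expanded into per-step conditioning, and the sketch below shows that idea. The prompts, the step length, and both helper functions are hypothetical. The article does not describe how MusicLM blends one section into the next, and this sketch does not attempt that part.

```python
from typing import List, Tuple


def prompt_schedule(story: List[Tuple[str, float]], step_s: float) -> List[str]:
    """Expand (prompt, duration_s) pairs into the active prompt for each generation step."""
    schedule: List[str] = []
    for prompt, duration_s in story:
        schedule.extend([prompt] * int(round(duration_s / step_s)))
    return schedule


def change_points(schedule: List[str], step_s: float) -> List[float]:
    """Times (in seconds) at which the active prompt switches."""
    return [i * step_s for i in range(1, len(schedule)) if schedule[i] != schedule[i - 1]]


if __name__ == "__main__":
    # Hypothetical prompts; not taken from Google's demo page.
    story = [("calm ambient pads", 15.0), ("upbeat jazz", 10.0), ("driving rock", 20.0)]
    sched = prompt_schedule(story, step_s=5.0)
    print(len(sched))                        # 9
    print(change_points(sched, step_s=5.0))  # [15.0, 25.0]
```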


Further down the example page, Google dives into MusicLM’s ability to re-create:

- Particular instruments (e.g., flute, cello, guitar).
- Different musical genres.
- Various musician experience levels.
- Places (escaping prison, gym).
- Time periods (a club in the 1950s).
- And more.

AI-generated music isn't a new idea by any stretch. However, AI music generation methods of previous decades often created musical notation that was later played by hand or through a synthesizer, whereas MusicLM generates the raw audio frequencies of the music. Also, in December, we covered Riffusion, a hobby AI project that can similarly create music from text descriptions, but not at high fidelity. Google references Riffusion in its MusicLM academic paper, saying that MusicLM surpasses it in quality.

In the MusicLM paper, its creators outline potential impacts of MusicLM. These include “potential misappropriation of creative content” (i.e., copyright issues), potential biases for cultures underrepresented in the training data, and potential cultural appropriation issues. As a result, Google emphasizes the need for more work on tackling these risks, and the researchers are holding back the code: “We have no plans to release models at this point.”

Google’s researchers are already looking ahead toward future improvements: “Future work may focus on lyrics generation, along with improvement of text conditioning and vocal quality. Another aspect is the modeling of high-level song structure like introduction, verse, and chorus. Modeling the music at a higher sample rate is an additional goal.”

It's probably not too much of a stretch to suggest that AI researchers will keep improving music generation technology until anyone can create studio-quality music in any style just by describing it. Still, no one can yet predict exactly when that goal will be attained or how it will affect the music industry. Stay tuned for further developments.
