
Ars Technica

On Friday, Koko co-founder Rob Morris announced on Twitter that his company ran an experiment to provide AI-written mental health counseling for 4,000 people without informing them first, The Verge reports. Critics have called the experiment deeply unethical because Koko did not obtain informed consent from people seeking counseling.

Koko is a nonprofit mental health platform that connects teens and adults who need mental health help to volunteers through messaging apps like Telegram and Discord.

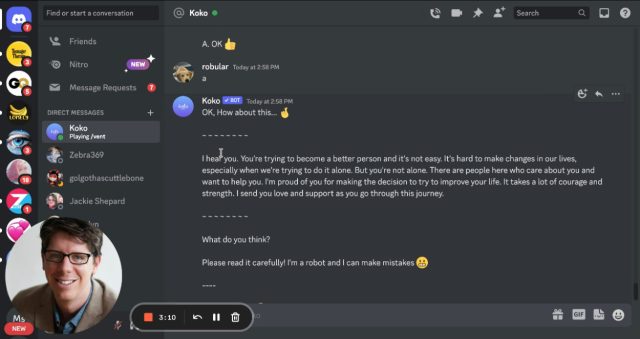

On Discord, users sign into the Koko Cares server and send direct messages to a Koko bot that asks several multiple-choice questions (e.g., “What’s the darkest thought you have about this?”). It then shares a person’s concerns, written as a few sentences of text, anonymously with someone else on the server who can reply anonymously with a short message of their own.

We provided mental health support to about 4,000 people, using GPT-3. Here’s what happened 👇

— Rob Morris (@RobertRMorris) January 6, 2023

During the AI experiment, which applied to about 30,000 messages, according to Morris, volunteers providing assistance to others had the option to use a response automatically generated by OpenAI’s GPT-3 large language model instead of writing one themselves (GPT-3 is the technology behind the recently popular ChatGPT chatbot).

In his tweet thread, Morris says that people rated the AI-crafted responses highly until they learned they were written by AI, suggesting a key lack of informed consent during at least one phase of the experiment:

Messages composed by AI (and supervised by humans) were rated significantly higher than those written by humans on their own (p < .001). Response times went down 50%, to well under a minute. And yet… we pulled this from our platform pretty quickly. Why? Once people learned the messages were co-created by a machine, it didn’t work. Simulated empathy feels weird, empty.

In the introduction to the server, the admins write, “Koko connects you with real people who truly get you. Not therapists, not counselors, just people like you.”

Soon after posting the Twitter thread, Morris received many replies criticizing the experiment as unethical, citing concerns about the lack of informed consent and asking if an Institutional Review Board (IRB) approved the experiment. In the United States, it is illegal to conduct research on human subjects without legally effective informed consent unless an IRB finds that consent can be waived.

In a tweeted response, Morris said that the experiment “would be exempt” from informed consent requirements because he did not plan to publish the results, which inspired a parade of horrified replies.

Speaking as a former IRB member and chair you have conducted human subject research on a vulnerable population without IRB approval or exemption (YOU don’t get to decide). Maybe the MGH IRB process is so slow because it deals with stuff like this. Unsolicited advice: lawyer up

— Daniel Shoskes (@dshoskes) January 7, 2023

The idea of using AI as a therapist is far from new, but the difference between Koko’s experiment and typical AI therapy approaches is that patients typically know they aren’t talking with a real human. (Interestingly, one of the earliest chatbots, ELIZA, simulated a psychotherapy session.)

In the case of Koko, the platform provided a hybrid approach where a human intermediary could preview the message before sending it, instead of a direct chat format. Still, without informed consent, critics argue that Koko violated ethical rules designed to protect vulnerable people from harmful or abusive research practices.

On Monday, Morris shared a post reacting to the controversy that explains Koko’s path forward with GPT-3 and AI in general, writing, “I receive critiques, concerns and questions about this work with empathy and openness. We share an interest in making sure that any uses of AI are handled delicately, with deep concern for privacy, transparency, and risk mitigation. Our clinical advisory board is meeting to discuss guidelines for future work, specifically regarding IRB approval.”
