When should you use bandit tests, and when is A/B/n testing best?

Though there are some strong proponents (and opponents) of bandit testing, there are certain use cases where bandit testing may be optimal. Question is, when?

First, let’s dive into bandit testing and talk a bit about the history of the N-armed bandit problem.

What is the multi-armed bandit problem?

The multi-armed bandit problem is a classic thought experiment. It describes a situation in which a fixed, finite amount of resources must be divided between conflicting (alternative) options in order to maximize the expected gain.

Imagine this scenario:

You’re in a casino. There are many different slot machines (known as ‘one-armed bandits,’ as they’re known for robbing you), each with a lever (an arm, if you will). You think that some slot machines pay out more frequently than others do, so you’d like to maximize your winnings.

You only have a limited amount of resources: if you pull one arm, then you’re not pulling another arm. Of course, the goal is to walk out of the casino with the most money. Question is, how do you learn which slot machine is the best and get the most money in the shortest amount of time?

If you knew which lever would pay out the most, you would just pull that lever all day. With regard to optimization, the applications of this problem are obvious. As Andrew Anderson said in an Adobe article:

Andrew Anderson:

“In an ideal world, you would already know all possible values, be able to intrinsically call the value of each action, and then apply all your resources towards that one action that causes you the greatest return (a greedy action). Unfortunately, that is not the world we live in, and the problem lies when we allow ourselves that delusion. The problem is that we do not know the value of each outcome, and as such need to maximize our ability of that discovery.”

What is bandit testing?

Bandit testing is a testing approach that uses algorithms to optimize your conversion target while the experiment is still running rather than after it has finished.

The practical differences between A/B testing and bandit testing

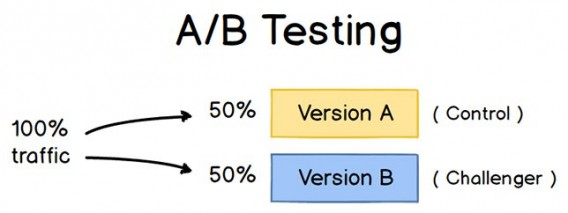

A/B split testing is the current default for optimization, and you know what it looks like:

You send 50% of your traffic to the control and 50% of your traffic to the variation, run the test ‘til it’s valid, and then decide whether to implement the winning variation.

Explore-exploit

In statistical terms, A/B testing consists of a short period of pure exploration, where you’re randomly assigning equal numbers of users to Version A and Version B. It then jumps into a long period of pure exploitation, where you send 100% of your users to the more successful version of your site.

In Bandit Algorithms for Website Optimization, the author outlines two problems with this:

- It jumps discretely from exploration to exploitation, when you might be able to transition more smoothly.

- During the exploratory phase (the test), it wastes resources exploring inferior options in order to gather as much data as possible.

In essence, the difference between bandit testing and A/B/n testing is how they deal with the explore-exploit dilemma.

As I mentioned, A/B testing explores first and then exploits (it keeps only the winner).
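To make the explore-first, exploit-later pattern concrete, here is a minimal Python sketch of how a classic A/B test hands out traffic. The function name `ab_test_allocation`, the `test_visitors` cutoff, and passing the winner in by hand are illustrative assumptions, not the behavior of any particular testing tool.

```python
import random


def ab_test_allocation(visitor_index, test_visitors, winner=None, rng=random):
    """Return the variation ("A" or "B") shown to one visitor.

    Hypothetical helper: while the test runs, every visitor is split 50/50
    (pure exploration); afterwards everyone gets the winner (pure exploitation).
    """
    if visitor_index < test_visitors:
        # Exploration phase: random, equal-probability assignment.
        return rng.choice(["A", "B"])
    if winner is None:
        raise ValueError("the test is over, so a winner must be supplied")
    # Exploitation phase: 100% of traffic goes to the winning variation.
    return winner
```

Note the hard switch at `test_visitors`: nothing learned during the test changes the split until the test ends.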

Bandit testing tries to solve the explore-exploit problem in a different way. Instead of two distinct periods of pure exploration and pure exploitation, bandit tests are adaptive, and simultaneously include exploration and exploitation.

So, bandit algorithms try to minimize opportunity costs and minimize regret (the difference between your actual payoff and the payoff you would have collected had you played the optimal (best) options at every opportunity). Matt Gershoff from Conductrics wrote a great blog post discussing bandits. Here’s what he had to say:

Matt Gershoff:

“Some like to call it earning while learning. You need to both learn in order to figure out what works and what doesn’t, but to earn; you take advantage of what you have learned. This is what I really like about the Bandit way of looking at the problem, it highlights that collecting data has a real cost, in terms of opportunities lost.”
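The regret mentioned above can be written down in a few lines. This is only a sketch: it computes expected regret from true conversion rates, which you never know in a real test, and the names `cumulative_regret`, `true_rates`, and `arms_played` are made up for illustration.

```python
def cumulative_regret(true_rates, arms_played):
    """Expected regret of a sequence of arm choices.

    true_rates: the real conversion rate of each arm (unknown in practice;
                supplied here only for illustration).
    arms_played: the index of the arm shown to each visitor, in order.
    """
    best_rate = max(true_rates)
    # Each play costs the gap between the best arm and the arm actually played.
    return sum(best_rate - true_rates[arm] for arm in arms_played)
```

For example, with assumed rates of 5% and 10%, an even split over 1,000 visitors sends 500 of them to the weaker arm, which costs about 25 expected conversions. That is the "real cost" of collecting data that the quote refers to.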

Chris Stucchio from VWO offers the following explanation of bandits:

Chris Stucchio:

“Anytime you are faced with the problem of both exploring and exploiting a search space, you have a bandit problem. Any method of solving that problem is a bandit algorithm, and this includes A/B testing. The goal in any bandit problem is to avoid sending traffic to the lower performing variations. Virtually every bandit algorithm you read about on the internet (primary exceptions being adversarial bandit, my jacobi diffusion bandit, and some jump process bandits) makes several mathematical assumptions:

a) Conversion rates don’t change over time.

b) Displaying a variation and observing a conversion happen instantaneously. This means the following timeline is impossible: 12:00 Visitor A sees Variation 1. 12:01 Visitor B sees Variation 2. 12:02 Visitor A converts.

c) Samples in the bandit algorithm are independent of each other.

A/B testing is a fairly robust algorithm when these assumptions are violated. A/B testing doesn’t care much if conversion rates change over the test period, i.e. if Monday is different from Saturday, just make sure your test has the same number of Mondays and Saturdays and you are fine. Similarly, as long as your test period is long enough to capture conversions, again, it’s all good.”

In essence, there shouldn’t be an ‘A/B testing vs. bandit testing, which is better?’ debate, because it’s comparing apples to oranges. These two methodologies serve two different needs.

Benefits of bandit testing

The first question to answer, before answering when to use bandit tests, is why to use bandit tests. What are the advantages?

When they were still available (prior to August 2019), Google Content Experiments used bandit algorithms. They used to reason that the benefits of bandits are plentiful:

They’re more efficient because they move traffic towards winning variations gradually, instead of forcing you to wait for a “final answer” at the end of an experiment. They’re faster because samples that would have gone to obviously inferior variations can be assigned to potential winners. The extra data collected on the high-performing variations can help separate the “good” arms from the “best” ones more quickly.

Matt Gershoff outlined 3 reasons you should care about bandits in a post on his company blog (paraphrased):

- Earn while you learn. Data collection is a cost, and the bandit approach at least lets us consider these costs while running optimization projects.

- Automation. Bandits are the natural way to automate decision optimization with machine learning, especially when applying user targeting, since correct A/B tests are much more complicated in that situation.

- A changing world. Matt explains that by letting the bandit method always leave some chance to select the poorer performing option, you give it a chance to ‘reconsider’ the option’s effectiveness. It provides a working framework for swapping out low performing options with fresh options, in a continuous process.

In essence, people like bandit algorithms because of the smooth transition between exploration and exploitation, the speed, and the automation.

A few flavors of bandit methodology

There are tons of different bandit methods. Like a lot of debates around testing, a lot of this is of secondary importance and misses the forest for the trees.

Without getting too caught up in the nuances between methods, I’ll explain the simplest (and most common) method: the epsilon-greedy algorithm. Knowing this will allow you to understand the broad strokes of what bandit algorithms are.

Epsilon-greedy method

One strategy that has been shown to perform well time after time in practical problems is the epsilon-greedy method. We always keep track of the number of pulls of the lever and the amount of rewards we have received from that lever. 10% of the time, we choose a lever at random. The other 90% of the time, we choose the lever that has the highest expectation of rewards. (source)

Okay, so what do I mean by greedy? In computer science, a greedy algorithm is one that always takes the action that seems best at that moment. So, an epsilon-greedy algorithm is almost a fully greedy algorithm: most of the time it picks the option that looks best at that moment.

However, every once in a while, an epsilon-greedy algorithm chooses to explore the other available options.

So epsilon-greedy is a constant play between:

- Explore: randomly select an action a certain percent of the time (say 20%);

- Exploit (play greedy): pick the current best the rest of the time (say 80%).
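The explore/exploit split described above fits in a short class. This is a minimal sketch, not a production implementation: the class and method names are made up for illustration, and the conversion rates in the demo are invented.

```python
import random


class EpsilonGreedy:
    """Minimal epsilon-greedy bandit over a fixed number of arms (variations)."""

    def __init__(self, n_arms, epsilon=0.1, rng=None):
        self.epsilon = epsilon            # share of traffic spent exploring
        self.pulls = [0] * n_arms         # times each arm was shown
        self.rewards = [0.0] * n_arms     # total reward (e.g. conversions) per arm
        self.rng = rng or random.Random()

    def mean_reward(self, arm):
        # Observed reward per pull; 0.0 for an arm that has never been shown.
        return self.rewards[arm] / self.pulls[arm] if self.pulls[arm] else 0.0

    def select_arm(self):
        if self.rng.random() < self.epsilon:
            # Explore: pick any arm uniformly at random.
            return self.rng.randrange(len(self.pulls))
        # Exploit: pick the arm with the best observed mean reward so far.
        return max(range(len(self.pulls)), key=self.mean_reward)

    def update(self, arm, reward):
        self.pulls[arm] += 1
        self.rewards[arm] += reward


if __name__ == "__main__":
    # Simulated traffic with invented conversion rates for two variations.
    true_rates = [0.05, 0.10]
    bandit = EpsilonGreedy(n_arms=2, epsilon=0.1)
    for _ in range(10_000):
        arm = bandit.select_arm()
        bandit.update(arm, 1.0 if random.random() < true_rates[arm] else 0.0)
    print(bandit.pulls)  # how many visitors each variation ended up receiving
```

With `epsilon=0.1` this matches the 10%/90% split quoted earlier; setting it to `0.2` gives the 20%/80% split from the list.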

This image (and the article from which it came) explains epsilon-greedy really well:

There are some pros and cons to the epsilon-greedy method. Pros include:

- It’s simple and easy to implement.

- It’s usually effective.

- It’s not as affected by seasonality.

Some cons:

- It doesn’t use a measure of variance.

- Should you decrease exploration over time?

What about other algorithms?

Like I said, a bunch of other bandit methods try to solve these cons in different ways. Here are a few:

I could write 15,000 words on this, but instead, just know the bottom line is that all the other methods are simply trying to best balance exploration (learning) with exploitation (taking action based on current best information).

Matt Gershoff sums it up really well:

Matt Gershoff:

“Unfortunately, like the Bayesian vs Frequentist arguments in AB testing, it looks like this is another area where the analytics community might get led astray into losing the forest for the trees. At Conductrics, we employ and test several different bandit approaches. In the digital environment, we want to ensure that whatever approach is used, that it is robust to nonstationary data. That means that even if we use Thompson sampling, a UCB method, or Boltzmann approach, we always like to blend in a bit of the epsilon-greedy approach, to ensure that the system doesn’t early converge to a sub-optimal solution. By selecting a random subset, we also are able to use this data to run a meta A/B Test, that lets the client see the lift associated with using bandits + targeting.”
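As a rough illustration of the blend the quote describes, here is a sketch of Thompson sampling with a small epsilon-greedy style random floor. It is an assumption about what such a blend could look like, not Conductrics’ implementation; the function name, the 5% default, and the uniform Beta(1, 1) prior are all choices made for this example.

```python
import random


def choose_arm(successes, failures, epsilon=0.05, rng=random):
    """Thompson sampling with a small random floor.

    successes[i] / failures[i]: conversions and non-conversions seen for arm i.
    """
    n_arms = len(successes)
    if rng.random() < epsilon:
        # Random floor: keeps every arm alive so the bandit can notice
        # when conversion rates drift (nonstationary data).
        return rng.randrange(n_arms)
    # Thompson sampling: draw a plausible conversion rate for each arm from
    # its Beta posterior (uniform Beta(1, 1) prior) and play the highest draw.
    draws = [rng.betavariate(successes[i] + 1, failures[i] + 1) for i in range(n_arms)]
    return max(range(n_arms), key=lambda i: draws[i])
```

Unlike plain epsilon-greedy, the Beta draws widen when an arm has little data, so uncertainty (variance) influences the choice; the random floor covers the case where the data itself goes stale.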

Note: if you want to nerd out on the different bandit algorithms, this is a good paper to check out.

When to use bandit tests instead of A/B/n tests?

There’s a high level answer, and then there are some specific circumstances in which bandits work well. For the high level answer, if you have a research question where you want to understand the effect of a treatment and have some certainty around your estimates, a standard A/B test experiment will be best.

According to Matt Gershoff, “If on the other hand, you actually care about optimization, rather than understanding, bandits are often the way to go.”

Specifically, bandit algorithms tend to work well for really short tests and, paradoxically, really long tests (ongoing tests). I’ll split up the use cases into those two groups.

1. Short tests

Bandit algorithms are conducive to short tests for clear reasons: if you were to run a classic A/B test instead, you’d not even be able to enjoy the period of pure exploitation (after the experiment ended). Instead, bandit algorithms allow you to adjust in real time and send more traffic, more quickly, to the better variation. As Chris Stucchio says, “Whenever you have a small amount of time for both exploration and exploitation, use a bandit algorithm.”

Here are specific use cases within short tests:

a. Headlines

Headlines are the perfect use case for bandit algorithms. Why would you run a classic A/B test on a headline if, by the time you learn which variation is best, the time where the answer is applicable is over? News has a short half-life, and bandit algorithms determine quickly which is the better headline.

Chris Stucchio used a similar example in his Bayesian Bandits post. Imagine you’re a newspaper editor. It’s not a slow day; a murder victim has been found. Your reporter has to decide between two headlines, “Murder victim found in adult entertainment venue” and “Headless Body in Topless Bar.” As Chris says, geeks now rule the world, and this is now usually an algorithmic decision, not an editorial one. (Also, this is likely how sites like Upworthy and BuzzFeed do it).

b. Short term campaigns and promotions

Similar to headlines, there’s a big opportunity cost if you choose to A/B test. If your campaign is a week long, you don’t want to spend the week exploring with 50% of your traffic, because by the time you learn anything, it’s too late to exploit the best option.

This is especially true with holidays and seasonal promotions. Stephen Pavlovich from Conversion.com recommends bandits for short term campaigns:

Stephen Pavlovich:

“A/B testing isn’t that useful for short-term campaigns. If you’re running tests on an ecommerce site for Black Friday, an A/B test isn’t that practical, since you might only be confident in the result at the end of the day. Instead, a MAB will drive more traffic to the better-performing variation, and that in turn can increase revenue.”

2. Long-term testing

Oddly enough, bandit algorithms are effective in long term (or ongoing) testing. As Stephen Pavlovich put it:

Stephen Pavlovich:

“A/B tests also fall short for ongoing tests, in particular where the test is constantly evolving. Suppose you’re running a news site, and you want to determine the best order to display the top five sports stories in. A MAB framework can allow you to set it and forget. In fact, Yahoo! actually published a paper on how they used MAB for content recommendation, back in 2009.”

There are a few different use cases within ongoing testing as well:

a. “Set it and forget it” (automation for scale)

Because bandits automatically shift traffic to higher performing (at the time) variations, you have a low-risk solution for continuous optimization. Here’s how Matt Gershoff put it:

Matt Gershoff:

“Bandits can be used for ‘automation for scale.’ Say you have many components to continuously optimize, the bandit approach gives you a framework to partially automate the optimization process for low risk, high transaction problems that are too costly to have expensive analysts pore over.”

Ton Wesseling also mentions that bandits can be great for testing on high traffic pages after learning from A/B tests:

Ton Wesseling:

“Just give some variations to a bandit and let it run. Preferably you use a contextual bandit. We all know the perfect page for everyone doesn’t exist, it differs per segment. The bandit will show the best possible variation to each segment.”

b. Targeting

Another long term use of bandit algorithms is targeting, which is specifically pertinent when it comes to serving specific ads and content to user sets. As Matt Gershoff put it:

Matt Gershoff:

“Really, true optimization is more of an assignment problem than a testing problem. We want to learn the rules that assign the best experiences to each customer. We can solve this using what is known as a contextual bandit (or, alternatively, a reinforcement learning agent with function approximation). The bandit is useful here because some types of users may be more common than others. The bandit can take advantage of this, by applying the learned targeting rules sooner for more common users, while continuing to learn (experiment) on the rules for the less common user types.”
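A very simple way to picture a contextual bandit is one small bandit per user segment. The sketch below does exactly that with epsilon-greedy; real contextual bandits usually generalize across context features (the function approximation mentioned in the quote) rather than keeping separate counts. The class name, segment labels, and variation names are hypothetical.

```python
import random
from collections import defaultdict


class SegmentedBandit:
    """One epsilon-greedy bandit per user segment (a very simple contextual bandit)."""

    def __init__(self, variations, epsilon=0.1, rng=None):
        self.variations = list(variations)
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        # stats[segment][variation] = [times shown, conversions]
        self.stats = defaultdict(lambda: {v: [0, 0] for v in self.variations})

    def _rate(self, segment, variation):
        shown, converted = self.stats[segment][variation]
        return converted / shown if shown else 0.0

    def choose(self, segment):
        if self.rng.random() < self.epsilon:
            # Explore within this segment.
            return self.rng.choice(self.variations)
        # Exploit the best-known variation for this segment only.
        return max(self.variations, key=lambda v: self._rate(segment, v))

    def record(self, segment, variation, converted):
        entry = self.stats[segment][variation]
        entry[0] += 1
        entry[1] += int(converted)
```

Because each segment keeps its own counts, common segments accumulate data faster and settle on a variation sooner, while rare segments keep experimenting for longer, which is the behavior described in the quote.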

Ton also mentioned that you can learn from contextual bandits:

Ton Wesseling:

“By putting your A/B test in a contextual bandit with segments you got from data research, you will find out if certain content is important for certain segments and not for others. That’s very valuable: you can use these insights to optimize the customer journey for every segment. This can be done with looking into segments after an A/B test too, but it’s less time consuming to let the bandit do the work.”

Further reading: A Contextual-Bandit Approach to Personalized News Article Recommendation

c. Blending Optimization with Attribution

Finally, bandits can be used to optimize problems across multiple touch points. This communication between bandits ensures that they’re working together to optimize the global problem and maximize results. Matt Gershoff gives the following example:

Matt Gershoff:

“You can think of Reinforcement Learning as multiple bandit problems that communicate with each other to ensure that they are all working together to find the best combinations across all of the touch points. For example, we have had clients that placed a product offer bandit on their site’s home page and one in their call center’s automated phone system. Based on the sales conversions at the call center, both bandits communicated local results to ensure that they are working in harmony to optimize the global problem.”

Caveats: potential drawbacks of bandit testing

Even though there are tons of blog posts with slightly sensationalist titles, there are a few things to consider before jumping on the bandit bandwagon.

First, multi-armed bandits can be difficult to implement. As Shana Carp said on a Growthhackers.com thread:

MAB is much much more computationally difficult to pull off unless you know what you are doing. The functional cost of doing it is basically the cost of three engineers: a data scientist, one normal guy to put into code and scale the code of what the data scientist says, and one dev-ops person. (Though the last two could probably play double on your team.) It is really rare to find data scientists who program extremely well.

The second thing, though I’m not sure it’s a big issue, is the time it takes to reach significance. As Paras Chopra pointed out, “There’s an inverse relationship (and hence a tradeoff) between how soon you see statistical significance and average conversion rate during the campaign.”

Chris Stucchio also outlined what he called the Saturday/Tuesday problem. Basically, imagine you’re running a test on two headlines:

- Happy Monday! Click here to buy now.

- What a beautiful day! Click here to buy now.

Then suppose you run a bandit algorithm, starting on Monday:

- Monday: 1,000 displays for “Happy Monday,” 200 conversions. 1,000 displays for “Beautiful Day,” 100 conversions.

- Tuesday: 1,900 displays for “Happy Monday,” 100 conversions. 100 displays for “Beautiful Day,” 10 conversions.

- Wednesday: 1,900 displays for “Happy Monday,” 100 conversions. 100 displays for “Beautiful Day,” 10 conversions.

- Thursday: 1,900 displays for “Happy Monday,” 100 conversions. 100 displays for “Beautiful Day,” 10 conversions.

Even though “Happy Monday” is inferior (a 20% conversion rate on Monday and about 5% the rest of the week averages out to roughly 7.1% over a full week, against a steady 10% for “Beautiful Day”), the bandit algorithm has almost converged to “Happy Monday,” so the number of samples shown “Beautiful Day” is very low. It takes a lot of data to correct this.
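The arithmetic behind those percentages can be checked directly. In the sketch below, the daily counts come from the example above; reading the 7.1% figure as a seven-day average with equal traffic each day is an assumption on my part, and the function names are made up for illustration.

```python
# Observed counts from the example above: (displays, conversions) per day.
happy_monday = [(1000, 200), (1900, 100), (1900, 100), (1900, 100)]
beautiful_day = [(1000, 100), (100, 10), (100, 10), (100, 10)]


def pooled_rate(days):
    """Conversion rate over all displays recorded so far."""
    displays = sum(d for d, _ in days)
    conversions = sum(c for _, c in days)
    return conversions / displays


def full_week_rate(monday_rate, other_rate):
    """Average rate over a seven-day week, assuming equal traffic every day."""
    return (monday_rate + 6 * other_rate) / 7


print(round(pooled_rate(happy_monday), 3))     # 0.075
print(round(pooled_rate(beautiful_day), 3))    # 0.1
print(round(full_week_rate(0.20, 0.05), 3))    # 0.071
```

Either way you slice it, “Happy Monday” trails the 10% of “Beautiful Day”, yet it received 1,900 of every 2,000 displays from Tuesday onward because of its strong Monday.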

(Note: A/B/n tests have the same problem with non-stationary data. That’s why you should test for full weeks.)

Chris also mentioned that bandits shouldn’t be used for email blasts:

Chris Stucchio:

“One very important note: email blasts are a fairly poor use case for standard bandits. The problem is that with email, conversions can happen long after a display occurs; you might send out thousands of emails before you see the first conversion. This violates the assumption underlying most bandit algorithms.”

Conclusion

Andrew Anderson summed it up really well in a Quora answer:

Andrew Anderson:

“In general bandit-based optimization can produce far superior results to regular A/B testing, but it also highlights organizational problems more. You are handing over all decision making to a system. A system is only as strong as its weakest points and the weakest points are going to be the biases that dictate the inputs to the system and the inability to understand or hand over all decision making to the system. If your organization can handle this then it is a great move, but if it can’t, then you are more likely to cause more problems than they are worth. Like any good tool, you use it for the situations where it can provide the most value, and not in ones where it doesn’t. Both techniques have their place and over reliance on any one leads to massive limits in the outcome generated to your organization.”

As mentioned above, the situations where bandit testing seems to flourish are:

- Headlines and short-term campaigns;

- Automation for scale;

- Targeting;

- Blending optimization with attribution.

Any questions, just ask in the comments!
