
Meta AI

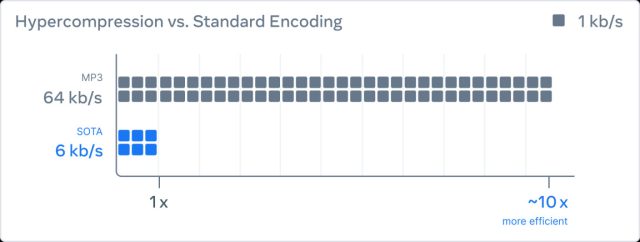

Last week, Meta announced an AI-powered audio compression method called “EnCodec” that can reportedly compress audio 10 times smaller than the MP3 format at 64kbps with no loss in quality. Meta says this technique could dramatically improve the sound quality of speech on low-bandwidth connections, such as phone calls in areas with spotty service. The technique also works for music.
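To put the "10 times smaller" claim in concrete terms, here is a rough back-of-the-envelope sketch. It assumes a constant bitrate and a three-minute clip, neither of which comes from Meta; the function name `size_bytes` is purely illustrative.

```python
def size_bytes(bitrate_kbps: float, seconds: float) -> float:
    """Size in bytes of a constant-bitrate stream (1 kbps = 1000 bits/s)."""
    return bitrate_kbps * 1000 / 8 * seconds

mp3_kbps = 64.0                    # the MP3 baseline quoted in the article
ratio = 10.0                       # the reported "10 times smaller" figure
encodec_kbps = mp3_kbps / ratio    # implied bitrate, about 6.4 kbps

clip_seconds = 180                 # assumption: a three-minute clip
mp3_size = size_bytes(mp3_kbps, clip_seconds)
encodec_size = size_bytes(encodec_kbps, clip_seconds)

print(f"MP3 at {mp3_kbps:g} kbps: {mp3_size / 1000:.0f} kB")
print(f"10x smaller ({encodec_kbps:g} kbps): {encodec_size / 1000:.0f} kB")
```

Under those assumptions, a clip that takes roughly 1.4 MB as a 64kbps MP3 would need only about a tenth of that.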

Meta debuted the technology on October 25 in a paper titled “High Fidelity Neural Audio Compression,” authored by Meta AI researchers Alexandre Défossez, Jade Copet, Gabriel Synnaeve, and Yossi Adi. Meta also summarized the research on its blog devoted to EnCodec.


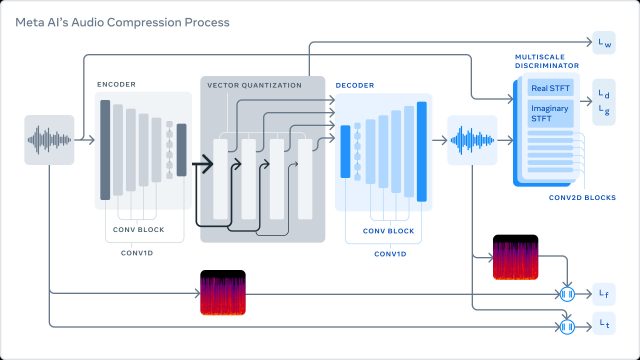

Meta describes its method as a three-part system trained to compress audio to a desired target size. First, the encoder transforms uncompressed data into a lower frame rate “latent space” representation. The “quantizer” then compresses the representation to the target size while keeping track of the most important information that will later be used to rebuild the original signal. (This compressed signal is what gets sent through a network or saved to disk.) Finally, the decoder turns the compressed data back into audio in real time using a neural network on a single CPU.
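The encoder, quantizer, and decoder stages can be illustrated with a deliberately simple toy. This is not Meta's model: block averaging stands in for the neural encoder, a plain uniform scalar quantizer stands in for Meta's quantizer, and sample repetition stands in for the neural decoder. The constants `FRAME` and `LEVELS` and all function names are hypothetical.

```python
import math

FRAME = 4     # hypothetical: samples folded into one latent value
LEVELS = 16   # hypothetical: 16 levels, i.e. 4 bits per latent value

def encode(samples):
    """Stand-in encoder: average each block of FRAME samples (lower frame rate)."""
    latents = []
    for i in range(0, len(samples), FRAME):
        block = samples[i:i + FRAME]
        latents.append(sum(block) / len(block))
    return latents

def quantize(latents):
    """Stand-in quantizer: map each latent in [-1, 1] to an integer code."""
    codes = []
    for x in latents:
        x = max(-1.0, min(1.0, x))  # clamp to the expected range
        codes.append(round((x + 1.0) / 2.0 * (LEVELS - 1)))
    return codes

def decode(codes, n_samples):
    """Stand-in decoder: undo the quantization, then repeat each value FRAME times."""
    out = []
    for c in codes:
        x = c / (LEVELS - 1) * 2.0 - 1.0
        out.extend([x] * FRAME)
    return out[:n_samples]

# A slow sine wave as test input; the codes are what would be sent or stored.
signal = [math.sin(2 * math.pi * t / 64) for t in range(256)]
codes = quantize(encode(signal))
restored = decode(codes, len(signal))
max_error = max(abs(a - b) for a, b in zip(signal, restored))

print(len(signal), "samples ->", len(codes), "codes")
print("largest reconstruction error:", round(max_error, 3))
```

The toy shows the basic trade: fewer, coarser numbers travel over the wire, and the decoder rebuilds an approximation. In the system Meta describes, trained neural networks do this work, which is what lets quality hold up at far smaller sizes.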


Meta’s use of discriminators proves key to creating a method for compressing the audio as much as possible without losing key elements of a signal that make it distinctive and recognizable:

“The key to lossy compression is to identify changes that will not be perceivable by humans, as perfect reconstruction is impossible at low bit rates. To do so, we use discriminators to improve the perceptual quality of the generated samples. This creates a cat-and-mouse game where the discriminator’s job is to differentiate between real samples and reconstructed samples. The compression model attempts to generate samples to fool the discriminators by pushing the reconstructed samples to be more perceptually similar to the original samples.”
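The cat-and-mouse dynamic in that quote can be sketched with a pair of opposing loss functions. The hinge formulation below is one common choice for adversarial training and is an assumption here, since the article does not say which loss Meta uses. The scores are made-up numbers standing in for a discriminator's output, where higher means "sounds real."

```python
def discriminator_loss(real_scores, fake_scores):
    """Hinge loss (assumed): low when real clips score high and reconstructions score low."""
    real_term = sum(max(0.0, 1.0 - s) for s in real_scores) / len(real_scores)
    fake_term = sum(max(0.0, 1.0 + s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def compressor_loss(fake_scores):
    """Adversarial term for the compression model: low when reconstructions score high."""
    return sum(max(0.0, 1.0 - s) for s in fake_scores) / len(fake_scores)

# Hypothetical discriminator scores for two original and two reconstructed clips.
real_scores = [2.0, 0.5]
fake_scores = [-2.0, 0.5]

d_loss = discriminator_loss(real_scores, fake_scores)
g_loss = compressor_loss(fake_scores)
print("discriminator loss:", d_loss)
print("compressor loss:", g_loss)
```

The two objectives pull in opposite directions: the discriminator improves by scoring reconstructions lower, while the compression model improves by getting them scored higher.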

It’s worth noting that using a neural network for audio compression and decompression is far from new, especially for speech compression, but Meta’s researchers claim they are the first group to apply the technology to 48 kHz stereo audio (slightly better than CD’s 44.1 kHz sampling rate), which is typical for music files distributed on the Internet.

As for applications, Meta says this AI-powered “hypercompression of audio” could support “faster, better-quality calls” in bad network conditions. And, of course, being Meta, the researchers also mention EnCodec’s metaverse implications, saying the technology could eventually deliver “rich metaverse experiences without requiring major bandwidth improvements.”

Beyond that, maybe we’ll also get really small music audio files out of it someday. For now, Meta’s new tech remains in the research phase, but it points toward a future where high-quality audio can use less bandwidth, which would be great news for mobile broadband providers with overburdened networks from streaming media.
