Aurich Lawson / Getty Images

Matrix multiplication is at the heart of many machine learning breakthroughs, and it just got faster, twice. Last week, DeepMind announced it had discovered a more efficient way to perform matrix multiplication, breaking a 50-year-old record. This week, two Austrian researchers at Johannes Kepler University Linz claim they have bested that new record by one step.

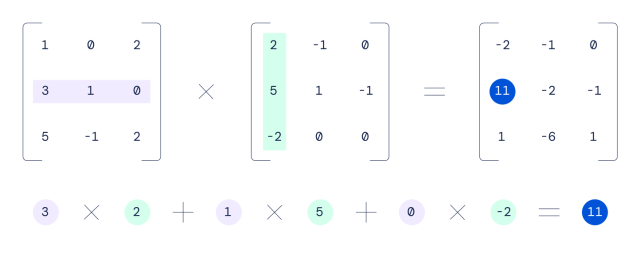

Matrix multiplication, which involves multiplying two rectangular arrays of numbers, is often found at the heart of speech recognition, image recognition, smartphone image processing, compression, and computer graphics generation. Graphics processing units (GPUs) are particularly good at performing matrix multiplication because of their massively parallel nature. They can dice a big matrix math problem into many pieces and attack parts of it simultaneously with a special algorithm.
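The idea of dicing a large product into independent pieces can be illustrated with a tiled (blocked) multiplication. The following is a minimal pure-Python sketch, not a description of how any particular GPU or library works: the function names `matmul` and `blocked_matmul` and the `tile` parameter are hypothetical, and the loops run sequentially here, whereas parallel hardware could assign each output tile to its own group of threads.

```python
def matmul(a, b):
    """Schoolbook product of two matrices given as lists of rows."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]


def blocked_matmul(a, b, tile):
    """Compute the same product tile by tile.

    Each (i0, j0) output tile depends only on a band of rows of `a` and a
    band of columns of `b`, so the tiles are independent units of work.
    """
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):          # pick an output tile (row band)
        for j0 in range(0, p, tile):      # pick an output tile (column band)
            for k0 in range(0, m, tile):  # accumulate partial products
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, p)):
                        c[i][j] += sum(a[i][k] * b[k][j]
                                       for k in range(k0, min(k0 + tile, m)))
    return c
```

Calling `blocked_matmul(a, b, 2)` on two 4×4 matrices yields the same result as `matmul(a, b)`; only the order in which the work is carried out changes.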

In 1969, a German mathematician named Volker Strassen discovered the previous-best algorithm for multiplying 4×4 matrices, which reduces the number of steps necessary to perform a matrix calculation. For example, multiplying two 4×4 matrices together using a traditional schoolroom method would take 64 multiplications, while Strassen’s algorithm can perform the same feat in 49 multiplications.
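The 64-versus-49 comparison can be checked directly. The sketch below assumes the standard textbook form of Strassen’s scheme, which multiplies 2×2 blocks with seven products instead of eight and is applied recursively, giving 7 × 7 = 49 scalar multiplications for a 4×4 matrix. The helper names and the list-based `counter` are illustrative choices, and the code assumes square matrices whose size is a power of two.

```python
def schoolbook(a, b, counter):
    """Row-times-column product; counter[0] tallies scalar multiplications."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
                counter[0] += 1
    return c


def add(x, y):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(x, y)]


def sub(x, y):
    return [[p - q for p, q in zip(r, s)] for r, s in zip(x, y)]


def split(m):
    """Return the four quadrants of a square matrix of even size."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def strassen(a, b, counter):
    """Strassen's recursion: seven block products per level instead of eight."""
    if len(a) == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
b = [[2, 0, 1, 3], [1, 4, 0, 2], [3, 1, 2, 0], [0, 2, 5, 1]]
naive_count, fast_count = [0], [0]
assert schoolbook(a, b, naive_count) == strassen(a, b, fast_count)
print(naive_count[0], fast_count[0])  # prints "64 49"
```

The saving in multiplications comes at the cost of extra additions and subtractions, which is why the step counts quoted here refer to multiplications only.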

DeepMind

Using a neural network called AlphaTensor, DeepMind discovered a way to reduce that count to 47 multiplications (for arithmetic modulo 2), and its researchers published a paper about the achievement in Nature last week.

Going from 49 steps to 47 doesn’t sound like much, but when you consider how many trillions of matrix calculations take place in a GPU every day, even incremental improvements can translate into large efficiency gains, allowing AI applications to run more quickly on existing hardware.

When math is just a game, AI wins

AlphaTensor is a descendant of AlphaGo (which bested world-champion Go players in 2017) and AlphaZero, which tackled chess and shogi. DeepMind calls AlphaTensor the “first AI system for discovering novel, efficient and provably correct algorithms for fundamental tasks such as matrix multiplication.”

To discover more efficient matrix math algorithms, DeepMind set up the problem like a single-player game. The company wrote about the process in more detail in a blog post last week:

In this game, the board is a three-dimensional tensor (array of numbers), capturing how far from correct the current algorithm is. Through a set of allowed moves, corresponding to algorithm instructions, the player attempts to modify the tensor and zero out its entries. When the player manages to do so, this results in a provably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is captured by the number of steps taken to zero out the tensor.
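To make the game concrete, here is a small sketch for the 2×2 case. It is an illustration under stated assumptions, not DeepMind’s code: the board is built as a 4×4×4 tensor using one possible indexing convention (entries of A, B, and the result flattened row by row), each move subtracts one rank-one tensor, and the seven moves listed are the standard Strassen products. All function and variable names are hypothetical.

```python
def matmul_tensor(n):
    """Board for n x n multiplication: entry [p][q][r] is 1 when
    A-entry p times B-entry q contributes to result-entry r."""
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                t[i * n + k][k * n + j][i * n + j] = 1
    return t


def play_move(t, u, v, w):
    """One move = one multiplication: subtract the rank-one tensor u x v x w."""
    for p, up in enumerate(u):
        for q, vq in enumerate(v):
            for r, wr in enumerate(w):
                t[p][q][r] -= up * vq * wr


def is_zero(t):
    return all(x == 0 for plane in t for row in plane for x in row)


# Each move is (combination of A entries, combination of B entries,
# where the product is added into the result), in the order
# [a11, a12, a21, a22], [b11, b12, b21, b22], [c11, c12, c21, c22].
STRASSEN_MOVES = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),     # (a11+a22)(b11+b22)
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),    # (a21+a22) b11
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),    # a11 (b12-b22)
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),    # a22 (b21-b11)
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),    # (a11+a12) b22
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),    # (a21-a11)(b11+b12)
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),    # (a12-a22)(b21+b22)
]

board = matmul_tensor(2)
for u, v, w in STRASSEN_MOVES:
    play_move(board, u, v, w)

moves_used = len(STRASSEN_MOVES)
solved = is_zero(board)  # True: seven moves clear the 2x2 board
```

The schoolbook method would clear the same board in eight moves, one per scalar product, so finding a faster algorithm amounts to finding a shorter sequence of moves.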

DeepMind then trained AlphaTensor using reinforcement learning to play this fictional math game, similar to how AlphaGo learned to play Go, and it gradually improved over time. Eventually, it rediscovered Strassen’s work and that of other human mathematicians, then surpassed them, according to DeepMind.

In a more complicated example, AlphaTensor discovered a new way to perform 5×5 matrix multiplication in 96 steps (versus 98 for the older method). This week, Manuel Kauers and Jakob Moosbauer of Johannes Kepler University in Linz, Austria, published a paper claiming they have reduced that count by one, down to 95 multiplications. It’s no coincidence that this apparently record-breaking new algorithm came so quickly, because it built on DeepMind’s work. In their paper, Kauers and Moosbauer write, “This solution was obtained from the scheme of [DeepMind’s researchers] by applying a sequence of transformations leading to a scheme from which one multiplication could be eliminated.”

Tech progress builds on itself, and with AI now searching for new algorithms, it’s possible that other longstanding math records could fall soon. Similar to how computer-aided design (CAD) allowed for the development of more complex and faster computers, AI may help human engineers accelerate its own rollout.
